AI Act: Fundamental Rights Impact Assessments (FRIA) – Who, When, Why, and How to Ensure Ethical AI Deployment

The European Union (EU) has positioned itself as a leader in shaping the responsible development and use of Artificial Intelligence (AI) through the landmark AI Act, which the Council of the EU approved on 21 May 2024. Rather than taking a one-size-fits-all approach, the legislation adopts a risk-based framework that concentrates regulatory effort on AI systems with the potential to cause significant harm. By focusing on these high-risk systems, the AI Act aims to protect public safety, fairness, and fundamental rights in the face of rapidly evolving technology.

The AI Act enters into force 20 days after its publication in the Official Journal of the European Union. Once the final text is published, organisations that fall within the scope of the EU AI Act should make sure they have thoroughly mapped their use of AI tools and implemented appropriate governance and compliance frameworks.

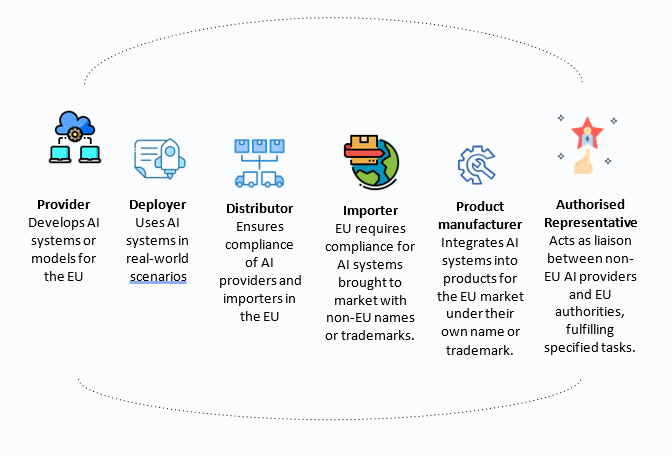

Which Organisations are Affected by the AI Act?

The AI Act affects a wide array of stakeholders involved in the development, deployment, and distribution of AI systems, including:

| Role | Description |
| --- | --- |
| AI Providers | Those developing or commissioning AI systems. |
| AI Deployers | Users of AI systems, excluding personal use. |
| Importers and Distributors | Entities bringing AI systems into the EU market. |
| AI Product Manufacturers | Producers of AI-enabled products. |
| Authorised Representatives | Representatives of non-EU AI providers within the EU. |

The AI Act’s extra-territorial reach also affects businesses outside the EU if they market AI systems or their outputs within the EU.

Non-EU providers of General Purpose AI (GPAI) models and high-risk AI systems must, among other obligations, appoint an EU-based authorised representative to liaise with regulators. We discuss this role in our blog “EU AI Act: Understanding the Role of Authorised Representatives in the AI Value Chain”.

For all of these key players, the Act imposes obligations that vary with the risk level of the AI system. One of the main requirements for high-risk AI systems is conducting a Fundamental Rights Impact Assessment (FRIA), which the rest of this article examines in more detail.

What is a Fundamental Rights Impact Assessment (FRIA)?

A FRIA is a systematic process designed to evaluate the potential effects of a particular action, policy, or technology on the fundamental rights of individuals. It is particularly significant in contexts where new technologies, such as AI, are being deployed, as these technologies can have profound implications for human rights and ethical standards.

The FRIA aims to address potential risks posed by high-risk AI systems to individuals’ fundamental rights, going beyond the technical requirements of the EU AI Act, like conformity assessments. AI providers may not anticipate all deployment scenarios or the biases and manipulations that AI systems can introduce. Therefore, the FRIA serves as a process for organisations to justify and be accountable for why, where, and how they deploy high-risk AI systems.

However, conducting FRIAs presents challenges, as not all deployers of high-risk AI systems may possess the necessary capabilities to fully assess these risks. The specific scope of entities required to conduct FRIAs remains unclear. There are ongoing discussions about whether to assess all fundamental rights or focus on those most likely affected.

Developing effective methodologies to translate technical AI system descriptions into concrete analyses of abstract concepts like fundamental rights remains a significant hurdle. Even so, organisations must integrate FRIAs into their existing compliance frameworks to ensure effective governance.

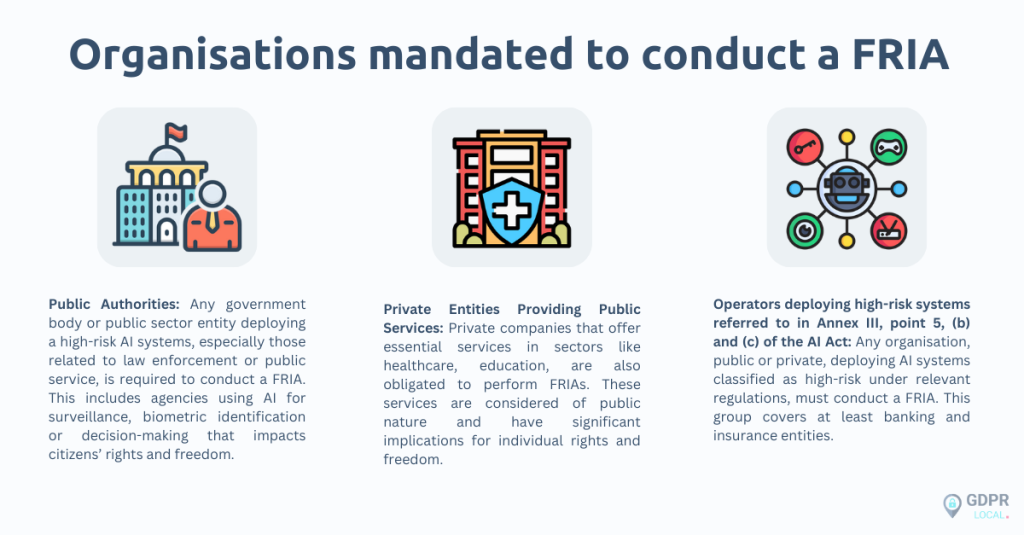

Who must Conduct a FRIA?

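In most cases, the obligation to conduct a FRIA under the EU AI Act falls on the deployer of a high-risk AI system, not the provider. The organisations mandated to conduct a FRIA according to Article 27 of the AI Act are:

– Public Bodies: Deployers that are bodies governed by public law.

– Private Entities Providing Public Services: Deployers that are private entities providing public services.

– Credit and Insurance Deployers: Deployers of the high-risk AI systems listed in points 5(b) and 5(c) of Annex III, which cover creditworthiness evaluation and credit scoring, and risk assessment and pricing for life and health insurance.

High-risk AI systems intended for use in critical infrastructure (point 2 of Annex III) are excluded from this obligation.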

The provider, however, may play a role in supporting FRIAs in a couple of ways:

– Providing Information: The FRIA process may require information about the AI system’s technical specifications, intended use, and potential risks. The provider should be prepared to collaborate with the deployer to provide this information.

– Pre-existing Impact Assessments: The Act allows deployers to potentially rely on previously conducted impact assessments by the provider, assuming they comprehensively address the specific high-risk system and its deployment scenario. However, the deployer should ensure these assessments are relevant and up-to-date before relying on them.
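As a rough illustration of the Article 27 scoping question, the check below sketches how a deployer might triage its own systems. It is a simplified reading of Article 27(1), not legal advice: the `Deployment` class, its field names, and the convention of writing Annex III points as strings such as "5(b)" are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Annex III points that trigger a FRIA for any deployer
# (simplified reading of Article 27(1); an assumption of this sketch).
ALWAYS_IN_SCOPE_POINTS = {"5(b)", "5(c)"}
# Annex III point 2 (critical infrastructure) is carved out of the obligation.
EXCLUDED_AREA = "2"


@dataclass(frozen=True)
class Deployment:
    """Hypothetical description of one planned deployment."""
    annex_iii_point: Optional[str]  # None means not an Annex III high-risk system
    public_body: bool = False
    private_entity_providing_public_services: bool = False


def fria_required(d: Deployment) -> bool:
    """Return True if this sketch considers a FRIA mandatory."""
    if d.annex_iii_point is None:
        return False
    # "2" or "2(a)" both belong to area 2.
    if d.annex_iii_point.split("(")[0] == EXCLUDED_AREA:
        return False
    if d.annex_iii_point in ALWAYS_IN_SCOPE_POINTS:
        return True
    return d.public_body or d.private_entity_providing_public_services


# A bank deploying a credit-scoring system is in scope whatever its legal form.
print(fria_required(Deployment("5(b)")))
```

A real scoping exercise would also need legal judgment on questions this sketch ignores, such as whether a private entity actually provides public services.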

What does a FRIA Involve?

A FRIA is a comprehensive assessment aimed at identifying and mitigating risks to fundamental rights. In general, the key components include:

– Description of Use: Detailing the intended purpose of the AI system, including the processes and contexts in which it will be deployed.

– Duration and Frequency: Outlining the timeframe and frequency of the system’s use to understand its potential long-term impact.

– Affected Groups: Identifying the categories of individuals or groups likely to be affected by the AI system, taking into account specific demographics and contexts.

– Risk Assessment: Evaluating the potential risks to individuals’ rights and freedoms, including but not limited to privacy, freedom of expression, and non-discrimination.

– Human Oversight: Describing the measures in place to ensure human oversight, which is critical for maintaining accountability and transparency.

– Mitigation Measures: Listing the steps to be taken to mitigate identified risks, including governance structures and complaint mechanisms.

These are general requirements. The precise content of a FRIA will depend on the type of organisation and on the AI system that is to be deployed.
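The components listed above can be captured in a simple record so that gaps are visible before the system goes live. The following is a minimal sketch, assuming one field per component; the class name `FriaRecord`, the field names, and the example values are illustrative and do not come from the AI Act or from any official template.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class FriaRecord:
    """Hypothetical container with one field per FRIA component."""
    description_of_use: str = ""
    duration_and_frequency: str = ""
    affected_groups: List[str] = field(default_factory=list)
    risks: List[str] = field(default_factory=list)
    human_oversight: str = ""
    mitigation_measures: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of components that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Example values are invented for illustration only.
record = FriaRecord(
    description_of_use="Screening of benefit applications",
    affected_groups=["benefit applicants"],
)
print(record.missing_sections())
```

Keeping the record as structured data rather than free text makes it easier to check completeness and to map the content onto a standardised template later.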

When should a FRIA be Conducted?

Prior to Deployment

A FRIA should be conducted before the first use of a high-risk AI system. This proactive approach helps identify potential risks early and allows mitigation measures to be implemented before the system is operational. The AI Act also emphasises proportionality in its approach to FRIAs: tying the assessment to first use avoids unnecessary bureaucracy and focuses effort on the most critical stage of an AI system’s life cycle.

Regular Updates

FRIAs should be revisited and updated whenever there are significant changes in the AI system or its operational context. Regular updates ensure that the assessment remains relevant and that new risks are promptly addressed.

Exemptions and Similar Cases

In cases where a similar FRIA has already been conducted, organisations may rely on previous assessments, provided they are still applicable. However, any significant changes necessitate a new or updated FRIA.

How to Conduct a FRIA?

Gather Information

Collect comprehensive data on the AI system, including its intended use, affected individuals, and potential risks. Engage with stakeholders to understand the broader impact of the system.

Risk Analysis

Use a structured approach to assess risks, categorising them as high, medium, low, or non-applicable. Consider factors like the probability and severity of potential harm.
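One common way to structure this step is a probability-by-severity matrix. The sketch below is only an assumption about how such a rating could work: the three-level scale, the multiplication rule, and the thresholds are illustrative choices, not values prescribed by the AI Act.

```python
from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def rate_risk(probability: Optional[Level], severity: Optional[Level]) -> str:
    """Map probability and severity to a rating; thresholds are illustrative."""
    if probability is None or severity is None:
        # The right in question is not engaged by this system.
        return "non-applicable"
    score = int(probability) * int(severity)  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


# Example: an unlikely but severe impact on non-discrimination.
print(rate_risk(Level.LOW, Level.HIGH))
```

An organisation may prefer to weight severity more heavily than probability for fundamental rights, in which case the thresholds or the scoring rule would need to change.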

Human Oversight and Mitigation

Define clear processes for human oversight and establish robust mitigation measures. This includes setting up internal governance structures and ensuring transparent complaint mechanisms.

Documentation and Reporting

Document the FRIA process thoroughly, including risk assessments, mitigation measures, and any updates. Submit the results to the relevant market surveillance authorities, using standardised templates where available.

Leverage Existing Assessments

If applicable, complement your FRIA with data protection impact assessments (DPIA) conducted under other regulations. This integrated approach helps streamline compliance efforts and ensures a holistic view of risks.

Relationship between FRIAs and DPIAs

The AI Act acknowledges the existence of Data Protection Impact Assessments (DPIAs) mandated under the General Data Protection Regulation (GDPR). To avoid duplicative efforts, the AI Act allows deployers to build upon existing DPIAs when conducting their FRIAs. This is particularly relevant for situations where the FRIA requirements overlap with data protection concerns.

However, it’s important to recognise the broader scope of FRIAs compared to DPIAs. While DPIAs primarily focus on the right to data protection under Article 8 of the EU Charter of Fundamental Rights, FRIAs encompass a wider range of fundamental rights, such as fairness, non-discrimination, and privacy.

The inherent complexity of AI systems further amplifies the challenge of conducting comprehensive FRIAs, as it necessitates a nuanced understanding of these various fundamental rights and the potential impact of the AI system on them.

Challenges in Implementation

Scope: The EU Charter of Fundamental Rights has six chapters covering diverse fundamental rights: dignity, freedoms, equality, solidarity, citizens’ rights, and justice. Assessing how high-risk AI systems affect these rights requires a deep understanding of Member States’ laws and EU case law. Sector-specific laws, such as media and medical regulations, further complicate assessments by imposing their own criteria for restricting rights, and variations in how Member States interpret rights add further complexity. In practice the focus may fall on key rights like data protection and non-discrimination, but selecting the relevant rights remains a challenge.

Governance: Organisations might leverage existing governance structures for FRIAs, but expertise in AI’s impact on human rights is crucial. In-house legal teams might lack this specialised knowledge, requiring either internal upskilling or external consultants with expertise in AI and human rights law.

Simplifying FRIA Completion

Recognising the potential complexity of FRIAs, the Act introduces a helpful tool to streamline the process for deployers.

The European AI Office will develop a standardised “FRIA Template Questionnaire”, along with an automated tool, to support the assessment under the AI Act. The questionnaire is designed to guide deployers through the FRIA process in a clear and efficient manner.

The automated tool could handle some aspects of the assessment, reducing the administrative burden on deployers.

The forthcoming FRIA Template Questionnaire can play a vital role by clarifying the expected depth of analysis and guiding companies in building internal capacity or seeking external support for conducting effective FRIAs.

A well-designed FRIA framework is essential, as companies will face regulatory scrutiny for non-compliance.

Conclusion

Conducting a FRIA under the AI Act is not just a regulatory requirement but a critical step in ensuring that high-risk AI systems are deployed responsibly and ethically. As organisations prepare for its implementation, they need to understand who must conduct FRIAs and how to navigate the assessment process effectively.

FRIAs ensure that AI deployments uphold ethical standards and respect individual rights, addressing potential risks comprehensively. With the forthcoming “FRIA Template Questionnaire” and automated tools, the EU aims to streamline compliance efforts, helping organisations integrate robust governance frameworks.

This proactive approach not only fosters transparency but also underscores the EU’s commitment to responsible AI development in a rapidly evolving technological landscape.