California’s Senate Bill 1047: Key Takeaways on California’s AI Safety Bill

In a significant step toward regulating advanced AI development, California’s legislature passed Senate Bill 1047 (SB-1047), also known as the “Safe and Secure Innovation for Frontier Artificial Intelligence Models Act,” on August 29, 2024.

SB-1047 is just one of many AI-focused bills currently under consideration in state legislatures across the U.S., as lawmakers work to establish initial guidelines for AI technologies. This surge in legislative activity reflects growing concerns about the potential dangers posed by the rapid advancement of AI systems, including generative AI. In 2023, a significant portion of the 190 AI-related bills introduced addressed issues like deepfakes, generative AI, and the application of AI in employment practices.

California’s AI Safety Bill, originally introduced on February 7, 2024, aims to authorize a developer of a covered model to determine whether that model qualifies for a limited duty exemption before initiating its training. SB-1047 sets forth specific requirements that developers must meet before they begin training AI models and before those models can be used commercially or publicly. Below, we break down the key obligations and compliance pathways for developers of AI systems, focusing on the pre-training and pre-deployment phases.

Scope of the Bill

The legislation specifically focuses on “covered AI models” that meet one or both of the following requirements:

Before January 1, 2027:

1. An AI model trained using more than 10^26 integer or floating-point operations and costing over $100 million, based on average cloud computing prices at the start of training, or

2. An AI model created by fine-tuning a covered model using a quantity of computing power equal to or greater than three times 10^25 integer or floating-point operations.

On and after January 1, 2027:

Unless otherwise regulated, a “covered model” includes:

(I) An AI model trained with computing power determined by the Government Operations Agency, costing over $100 million.

(II) An AI model fine-tuned from a covered model using computing power exceeding a threshold set by the Government Operations Agency, costing over $10 million.

SB-1047 introduces a regulatory framework for advanced AI systems, specifically targeting models trained using over 10^26 floating-point operations (FLOP). This computing threshold aligns with the one set in the Biden administration’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.
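The pre-2027 thresholds listed above can be sketched as a simple check. This is an illustrative reading of the figures as summarized in this article, not legal advice and not the statutory text; the `ModelRun` record and the function name are hypothetical.

```python
from dataclasses import dataclass

# Figures as summarized in this article for the period before January 1, 2027.
TRAINING_OPS_THRESHOLD = 1e26            # integer or floating-point operations
TRAINING_COST_THRESHOLD_USD = 100_000_000
FINE_TUNE_OPS_THRESHOLD = 3e25           # "three times 10^25" operations


@dataclass
class ModelRun:
    """Hypothetical record of a training or fine-tuning run (illustrative only)."""
    operations: float                    # total operations used in the run
    cost_usd: float                      # cost at average cloud prices at the start
    fine_tuned_from_covered: bool = False


def is_covered_before_2027(run: ModelRun) -> bool:
    """Return True if the run meets either pre-2027 criterion described above."""
    if run.fine_tuned_from_covered:
        # Fine-tuning a covered model: equal to or greater than 3 x 10^25 operations.
        return run.operations >= FINE_TUNE_OPS_THRESHOLD
    # Initial training: more than 10^26 operations AND more than $100 million.
    return (run.operations > TRAINING_OPS_THRESHOLD
            and run.cost_usd > TRAINING_COST_THRESHOLD_USD)
```

For example, a run of 2 × 10^26 operations that cost $150 million would be covered under this reading, while the same compute at a cost of $50 million would not, because both the compute and the cost conditions must be met.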

The bill primarily targets large-scale, high-cost AI models, such as those developed by major tech companies for advanced language processing, image recognition, or other complex AI functionalities. Examples of models that could be covered include large language models (LLMs) like GPT-4 or PaLM 2 and image generation models like DALL-E 2 or Stable Diffusion, but only if their training or fine-tuning meets the specified computational and cost thresholds.

Obligations for AI System Developers

Before Starting to Train a Covered AI Model

Under California’s Senate bill, before a developer starts the initial training of a covered model, it must put in place a detailed set of security and operational safeguards:

Implement cybersecurity protections

The developer must establish strong administrative, technical, and physical cybersecurity measures. These measures should prevent unauthorized access or misuse of the AI model both during and after its training. These protections are particularly important against sophisticated cyber threats like advanced persistent threats (APTs), which are often highly skilled and persistent malicious actors targeting sensitive AI systems.

Full ‘shutdown’ capability

The bill mandates that developers integrate a full shutdown mechanism that can stop the AI system entirely if it’s deemed necessary for safety reasons. The shutdown mechanism should also take into account any potential disruptions it might cause, especially to critical infrastructure. For instance, if the AI system is being used in healthcare, finance, or energy, abruptly shutting it down could have wide-reaching consequences, so these risks need to be carefully considered.

Develop a safety and security protocol

Developers are required to write a detailed safety and security protocol that outlines how they will prevent their AI model from causing or enabling harm. This protocol should:

– Specify safeguards that will prevent the model from being used in harmful ways, such as facilitating cyberattacks or creating weapons.

– Include objective compliance requirements, so that both the developer and third parties can clearly verify whether the model is adhering to the safety protocol.

– Establish testing procedures to regularly evaluate whether the model, or its derivatives, pose a risk of harm. This includes assessments of whether post-training modifications (fine-tuning) could introduce harmful capabilities.

– Outline the conditions under which the model might be shut down.

– Detail how the protocol will be updated and modified as the model evolves and risks change over time.

To ensure accountability, senior staff must be assigned responsibility for overseeing compliance with the safety protocol. This includes managing the teams working on the model, monitoring implementation, and reporting on progress.

A complete, unredacted copy of the safety protocol must be kept for as long as the AI model is in commercial or public use, and for five years after that. Any changes or updates made to the protocol must also be recorded and maintained.
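The retention rule above amounts to simple date arithmetic. The sketch below assumes the five-year period is counted in calendar years from the last day the model is in commercial or public use; that counting convention, the February 29 fallback, and the function name are assumptions made for illustration, not something the article or the bill specifies.

```python
from datetime import date

RETENTION_YEARS_AFTER_USE = 5  # "five years after that", per the summary above


def protocol_retention_end(last_day_in_use: date) -> date:
    """Earliest date the unredacted protocol could be discarded (illustrative)."""
    target_year = last_day_in_use.year + RETENTION_YEARS_AFTER_USE
    try:
        return last_day_in_use.replace(year=target_year)
    except ValueError:
        # February 29 has no counterpart in a non-leap target year.
        # Falling back to February 28 is an assumption of this sketch.
        return last_day_in_use.replace(year=target_year, day=28)
```

Under these assumptions, a model withdrawn from use on March 15, 2026, would require the protocol to be kept until at least March 15, 2031.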

Developers must also publish a redacted version of the safety protocol and send a copy to the Attorney General. The only acceptable redactions are those needed to protect public safety, trade secrets, or confidential information. This transparency allows the public and regulators to understand the safety measures in place without exposing sensitive data.

Annual review and updates

Every year, developers must review and update the safety protocol to reflect changes in the AI model’s capabilities, as well as evolving industry standards and best practices. This ongoing process ensures the protocol remains relevant and effective.

Computer cluster policies

A person operating a computing cluster (a network of interconnected computers functioning as a single system with significant computing power) must establish policies and procedures for instances where a customer uses resources sufficient to train a covered model. The operator must gather identifying information from customers, evaluate whether the customer plans to use the cluster for training a covered model, and ensure the ability to promptly shut down any resources being used to train or operate models under the customer’s control. The bill does not specify what “prompt” means in this context.
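A cluster operator's intake procedure for these duties might be sketched as follows. The `ClusterRequest` fields, the step wording, and the use of the pre-2027 training figure of 10^26 operations as the trigger for "resources sufficient to train a covered model" are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumption: reuse the pre-2027 training threshold cited earlier in this article.
COVERED_TRAINING_OPS = 1e26


@dataclass
class ClusterRequest:
    """Hypothetical customer request for compute (illustrative only)."""
    customer_name: str
    requested_operations: float
    identity_collected: bool = False
    intends_covered_training: Optional[bool] = None  # None means not yet assessed


def outstanding_steps(req: ClusterRequest) -> List[str]:
    """List the operator duties described above that still need attention."""
    if req.requested_operations <= COVERED_TRAINING_OPS:
        return []  # request is too small to train a covered model
    steps = []
    if not req.identity_collected:
        steps.append("collect identifying information")
    if req.intends_covered_training is None:
        steps.append("assess intent to train a covered model")
    # Shutdown capability is an ongoing duty, so it is always listed.
    steps.append("confirm prompt shutdown capability")
    return steps
```

Keeping the shutdown check on every qualifying request reflects that this duty continues for as long as the customer's resources are running, rather than being a one-time intake step.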

Additional safeguards

SB-1047 requires developers to implement any other necessary safeguards that prevent their models from posing unreasonable risks of causing critical harm.

Before Deploying an AI Model for Commercial or Public Use

Once the model is trained, developers must meet additional requirements before deploying the AI system in a public or commercial setting. These steps help ensure that the model doesn’t cause harm when used outside of the development environment.

Risk Assessment

Before releasing the model for commercial or public use, developers must assess whether the system could reasonably cause or enable critical harm. This includes analyzing the potential for the model to be used in harmful ways, such as enabling cyberattacks or other dangerous activities. Developers must ensure that their models cannot:

– Autonomously engage in dangerous behaviors, such as conducting cyberattacks.

– Be weaponized for mass harm, including the creation of chemical, biological, radiological, or nuclear weapons.

– Cause mass casualties or substantial financial damage (over $500 million) through their outputs.

The bill holds developers accountable for AI models that have the potential to act with limited human oversight, preventing situations that could result in destructive outcomes.

Developers must keep detailed records of the tests they perform to evaluate the model’s risks. This documentation needs to be thorough enough that an independent third party could replicate the testing process. The goal here is to ensure the testing was done properly and to hold developers accountable for their risk assessments.

Implement safeguards

Based on the risk assessment, developers must implement appropriate safeguards to prevent the AI system from causing or enabling harm. These safeguards should be tailored to the model’s specific capabilities and risks.

Ensure traceability

The bill requires developers to ensure that the actions of the AI model and its derivatives can be accurately attributed to the system itself. If the AI model is involved in any harmful activities, its developers must be able to trace and attribute those actions back to the model. This ensures accountability and helps authorities identify the source of any harmful behaviors.

Restrict use based on risk

If the developer concludes that there is an unreasonable risk that the AI model could cause or enable critical harm, they are prohibited from deploying it for commercial or public use. This restriction is crucial for preventing dangerous systems from being used in real-world applications without proper oversight.
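Taken together, the pre-deployment steps in this section work like a release gate. The sketch below is one hypothetical way to encode them as a checklist; the field names and blocker messages are illustrative and do not come from the bill.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PreDeploymentReview:
    """Hypothetical summary of a developer's pre-deployment review."""
    risk_assessment_done: bool
    test_records_replicable: bool          # detailed enough for a third party
    safeguards_implemented: bool
    actions_attributable: bool             # model actions can be traced
    unreasonable_risk_of_critical_harm: bool


def deployment_blockers(review: PreDeploymentReview) -> List[str]:
    """Return reasons deployment should not proceed; empty means none found."""
    blockers = []
    if not review.risk_assessment_done:
        blockers.append("risk assessment not completed")
    if not review.test_records_replicable:
        blockers.append("test records not detailed enough to replicate")
    if not review.safeguards_implemented:
        blockers.append("safeguards not implemented")
    if not review.actions_attributable:
        blockers.append("model actions cannot be attributed")
    if review.unreasonable_risk_of_critical_harm:
        # Per the summary above, this finding alone prohibits deployment.
        blockers.append("unreasonable risk of critical harm")
    return blockers
```

In this sketch, a finding of unreasonable risk blocks deployment even when every other step has been completed, mirroring the restriction described above.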

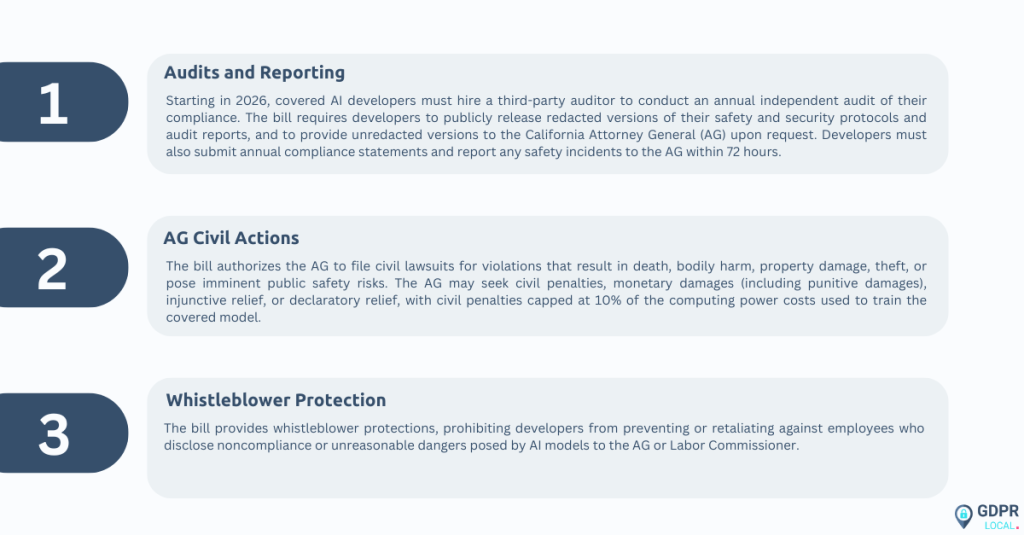

Enforcement and Compliance

California’s Senate bill establishes enforcement authority and guidelines to ensure compliance.

Legislative Journey and Next Steps

The bill, introduced in February 2024, moved through committees and readings in both the Senate and the Assembly. After amendments in August, it was returned to the Senate, which concurred in the amendments, and the bill completed its passage through the California Legislature on August 29, 2024. The bill is now awaiting the Governor’s signature to become law.

Stay informed on the latest developments in AI legislation, privacy state laws across the U.S., and more. Follow GDPRLocal for timely updates and expert insights on AI regulations and compliance trends.