International AI Regulations – Current AI Landscape

Artificial intelligence (AI) is changing our world faster than we could have imagined, from virtual assistants and recommendation algorithms to more complex applications in healthcare and finance. But with this rapid growth, there’s also a need to make sure AI is used responsibly. Around the globe, countries and organisations are working together to set standards for how AI should be developed and used.

Some of these agreements are legally binding, meaning they’re enforceable by law, while others are more like guidelines, offering voluntary standards to encourage good practices.

Here’s a look at some of the key international regulations shaping the future of AI, and which ones carry legal weight.

Key International Initiatives

The Council of Europe Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law

The Council of Europe Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law (hereafter the AI Treaty) is a significant step forward: it is the first legally binding international treaty on AI. Countries that sign and ratify the convention commit to making it part of their national laws.

Purpose of the document. The AI Treaty ensures that all stages of AI development and use are aligned with fundamental values like human rights, fairness, transparency, and safety. It aims to support responsible AI innovation while providing a strong ethical foundation for AI technologies. By being technology-neutral, it avoids over-regulating specific technologies and focuses on guiding principles.

The AI Treaty, which opened for signature on 5 September 2024, sets out rules and responsibilities for AI use. It highlights key principles, rights, safeguards, and requirements for managing risks and impacts, including:

| Fundamental Principles | Remedies, Procedural Rights, and Safeguards | Risk and Impact Management Requirements |
| --- | --- | --- |
| • Human dignity and individual autonomy (Everyone’s rights and freedom come first.) | • Keep clear records about how AI systems are used. (Everyone deserves to know how AI impacts them!) | • Regularly assess the risks and impacts of AI on rights and democracy. (Think of it as regular check-ups for AI!) |
| • Equality and non-discrimination (Treat everyone fairly, no exceptions!) | • Allow people to challenge AI decisions. (If you disagree with an AI’s choice, you can speak up!) | • Implement prevention measures based on these assessments. (Proactive steps keep everyone safer.) |
| • Respect for privacy and personal data protection (Your personal data is yours to control.) | • Provide ways to file complaints with the right authorities. (You have the right to voice your concerns!) | • Allow for bans on risky AI applications. (There should be limits on what AI can do if it’s dangerous.) |
| • Transparency and oversight (AI should be open about how it works.) | • Ensure protections and rights for those affected by AI. (Everyone deserves support when AI affects their freedoms.) | |
| • Accountability and responsibility (People and organisations must own up to their AI’s actions.) | • Let users know when they’re interacting with AI instead of a person. (It’s good to know when you’re talking to a bot!) | |
| • Reliability (AI should work reliably and earn our trust.) | | |
| • Safe innovation (New tech should prioritise safety and well-being.) | | |

Who Is Covered? The AI Treaty applies to both the public and private sectors, but it does not directly cover national security, defence, or scientific research and development. However, AI applications in these areas must still respect democratic principles.

Monitoring and Compliance. A “Conference of the Parties”, consisting of representatives from signatory countries, will review how well the treaty is being followed and can hold public hearings on AI issues.

Countries that sign this convention are expected to incorporate these principles into their own laws, with an understanding that there will be some oversight to ensure compliance. While the convention started with European nations, it’s open to other countries worldwide, so it could have a broad impact. The Council of Europe, which includes 46 member countries, has also involved observers like the U.S., Canada, and Israel, who may follow suit with similar standards.

The AI Treaty will enter into force once specific conditions are met. Under Article 30, it takes effect on the first day of the month following the expiry of a three-month period, which starts on the date when at least five signatories (including at least three Council of Europe member states) have completed their formal ratification process.

For any additional country that joins after the initial entry into force, the Convention will take effect for that country three months after they submit their ratification.
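The initial entry-into-force rule described above is essentially date arithmetic, so a short sketch may help. The Python below is illustrative only: the function names and the sample ratification dates are hypothetical, and the handling of edge cases (for example, a trigger date that falls on the first of a month) is one reading of the rule, not an authoritative interpretation of Article 30.

```python
from datetime import date
import calendar


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def threshold_date(ratifications, total_needed=5, coe_needed=3):
    """Return the date on which both ratification thresholds are first met.

    `ratifications` is a list of (ratification_date, is_coe_member) pairs.
    Returns None if the thresholds have not been reached.
    """
    total = coe_members = 0
    for when, is_coe_member in sorted(ratifications):
        total += 1
        coe_members += int(is_coe_member)
        if total >= total_needed and coe_members >= coe_needed:
            return when
    return None


def entry_into_force(trigger: date, waiting_months: int = 3) -> date:
    """First day of the month following the end of the waiting period."""
    end_of_wait = add_months(trigger, waiting_months)
    return add_months(end_of_wait.replace(day=1), 1)


# Hypothetical ratification dates, invented purely for illustration.
sample = [
    (date(2025, 1, 10), True),
    (date(2025, 2, 1), False),
    (date(2025, 2, 20), False),
    (date(2025, 3, 5), True),
    (date(2025, 3, 6), False),
    (date(2025, 4, 2), True),  # third Council of Europe member: threshold met
]

trigger = threshold_date(sample)
print(trigger)                    # 2025-04-02
print(entry_into_force(trigger))  # 2025-08-01
```

Note that in this made-up example five ratifications are not enough on their own: the clock only starts once the third Council of Europe member state has ratified.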

Legally Binding. This is the first legally binding international treaty on AI.

Impact. Signatory countries are committed to adopting national laws that align with these principles.

OECD’s Principles for Trustworthy AI

The Organisation for Economic Co-operation and Development (OECD) has played a key role in AI governance. In 2019, it introduced its Principles for Trustworthy AI, addressing key topics like human rights, fairness, transparency, and accountability.

Unlike the Council of Europe’s AI Treaty, however, the OECD’s principles are not legally binding. They act as voluntary guidelines rather than enforceable laws. Although countries adopt them of their own accord, these principles are widely respected and have influenced other AI frameworks.

Updated in 2024 to reflect recent AI developments, the OECD AI Principles remain a global reference, shaping policies around the world. Supported by over 50 countries, they provide a shared framework for fostering ethical, innovative AI that aligns with human rights and democratic values, promoting economic growth, social well-being, and environmental sustainability, while addressing associated risks.

These principles are based on five key values:

1. Inclusive Growth, Sustainable Development, and Well-being

AI should enhance human capabilities, promote inclusion, reduce inequalities, and protect the environment.

2. Human Rights and Democratic Values

AI must respect human dignity, privacy, non-discrimination, and freedom, ensuring safeguards for fairness, social justice, and transparency.

3. Transparency and Explainability

AI actors must provide clear, understandable information about AI systems, their capabilities, limitations, and outputs, enabling people to challenge decisions made by AI.

4. Robustness, Security, and Safety

AI systems must be secure, safe, and reliable throughout their lifecycle, ensuring that they function appropriately and do not pose risks to safety.

5. Accountability

AI actors must be accountable for the functioning of AI systems, including the ability to trace decisions, manage risks, and address potential biases or violations of rights.

The Bletchley and Seoul Declarations: Collaborative Commitments

The Bletchley Declaration, signed in November 2023, and the Seoul Declaration, signed in May 2024, are key international agreements focusing on the safe and responsible development of AI technologies. They set a framework for global collaboration and the development of best practices in AI safety, although they are not legally binding.

Between them, these declarations have been signed by a diverse group of countries, including the United States, United Kingdom, China, and members of the European Union, as well as emerging economies such as Brazil, India, and Nigeria. Other signatories include Australia, Canada, Israel, Japan, France, Germany, South Korea, and Italy, along with nations like Kenya, Indonesia, Singapore, and the UAE. This wide representation, spanning continents and regions, emphasises the global commitment to advancing AI safety through international cooperation and shared principles.

The Bletchley Declaration reflects the shared commitment of its signatory countries to ensure AI safety. Although non-binding, it is an important step toward international cooperation on AI safety.

Key initiatives include:

• International cooperation: Promoting collaboration between countries to advance AI safety research.

• Shared principles: Establishing common principles and best practices for AI safety.

• Risk assessment and mitigation: Developing tools to assess and manage AI risks.

The Seoul Declaration builds on the Bletchley Declaration with a focus on more concrete commitments to advance AI safety.

Key initiatives include:

• AI Safety Institutes: The establishment of international institutes dedicated to AI safety research.

• International cooperation: Enhanced coordination between nations for sharing AI safety best practices and conducting joint research.

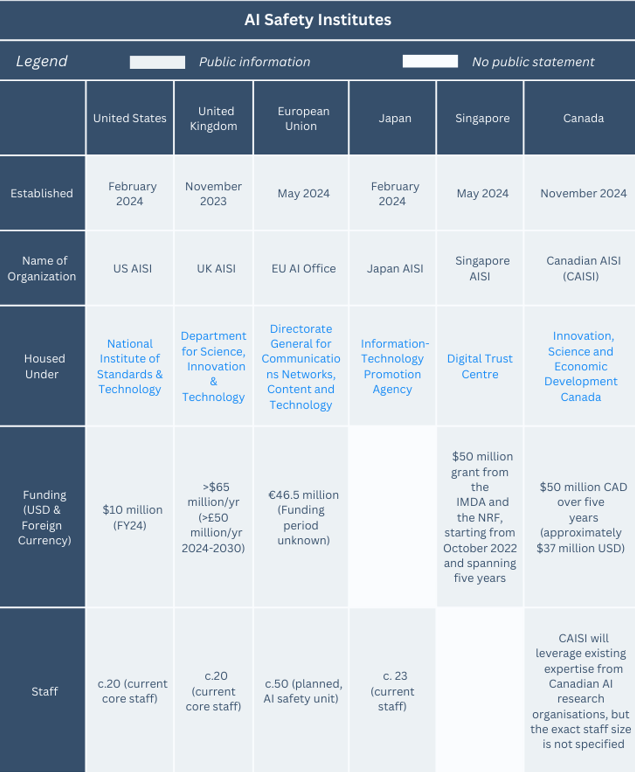

The AI Safety Institutes established as part of the international efforts to advance AI safety, include initiatives from several nations and the current state of established AI Institutes is:

These institutes are focused on advancing AI safety research, fostering international cooperation, and developing voluntary frameworks for the safe deployment and testing of AI models.

Although the institutes themselves are a direct product of these collaborative efforts, they are still in the process of operationalising their networks. The goal is to create a cooperative environment where research, safety testing, and information about AI models can be shared globally. The network aims to accelerate the understanding of AI’s potential risks and its safe application.

Although specific details for the next AI safety summit in 2025 have not yet been officially announced, it is expected to continue fostering international collaboration and dialogue on AI safety. These declarations and summits will contribute to the ongoing development of global frameworks for AI governance and safety.

Hiroshima AI Process by the G7: Practical Guidelines without Legal Enforcement

The G7 nations (the U.S., U.K., Japan, Canada, Germany, France, and Italy) launched their Hiroshima AI Process in 2023. To harness the vast potential of AI while addressing the challenges and risks posed by advanced AI systems, including foundation models and generative AI, they created the “Hiroshima AI Process Comprehensive Policy Framework”.

This framework aims to establish a global, inclusive governance structure for AI that promotes innovation and safeguards against harm. At the core of this initiative are two key documents:

• Hiroshima Process International Guiding Principles for All AI Actors and

• Hiroshima Process International Code of Conduct for Organizations Developing Advanced AI Systems.

These documents outline best practices and call on AI developers across academia, civil society, the private sector, and government to follow a risk-based approach in their work. They emphasise the importance of transparency, accountability, and respect for human rights, urging organisations to assess and mitigate risks throughout the lifecycle of AI systems.

The guiding principles will continue to evolve, with ongoing reviews and consultations to ensure they remain relevant in light of rapid technological advancements. Through these efforts, the Hiroshima AI Process seeks to build trust in AI and ensure its responsible development for the benefit of society.

Like the OECD principles, these guidelines are not legally binding, so they serve more as best practices. However, because the G7 includes some of the world’s most influential countries, the Hiroshima AI Process has the potential to shape international standards for responsible AI use.

The Global Privacy Assembly: Resolution on Generative AI

The Global Privacy Assembly (GPA) is an international forum for privacy and data protection authorities and officials from around the world. Its members include representatives from data protection agencies, privacy commissioners, and regulators from various countries. The Assembly brings together regulators to exchange information, share best practices, and address global privacy issues, including those related to AI, data breaches, and surveillance. It promotes data protection standards, fosters cooperation, and provides resolutions and guidelines to enhance privacy protections globally. It meets annually, influencing international privacy policy development.

In October 2023, the 45th Closed Session of the Global Privacy Assembly adopted a resolution addressing the growing concerns surrounding generative artificial intelligence (AI) systems, which are increasingly being deployed across various sectors. The resolution highlights the need for responsible development and deployment of generative AI technologies to ensure the protection of fundamental rights, including privacy and data protection.

The Assembly stresses the importance of applying principles such as privacy by design, data minimisation, and transparency in the creation and operation of these systems. It emphasises that developers and deployers must conduct thorough data protection impact assessments (DPIAs), secure informed consent, and establish legal bases for processing personal data.

Furthermore, the resolution calls for close cooperation with data protection authorities to mitigate risks and safeguard individuals’ rights, especially in high-risk scenarios such as automated decision-making and situations involving vulnerable populations. The Assembly also reaffirms the importance of integrating ethical considerations and environmental sustainability in AI practices, ensuring that generative AI respects human dignity and operates in compliance with legal and ethical standards.

The resolutions and guidelines issued by the Global Privacy Assembly are not legally binding, but they influence global privacy policies and encourage best practices among regulators and organisations.

United Nations General Assembly Resolution

The United Nations General Assembly’s resolution, adopted in March 2024, focuses on unlocking the potential of AI while ensuring it is developed and used responsibly. Titled “Seizing the opportunities of safe, secure, and trustworthy artificial intelligence systems for sustainable development,” the resolution recognises the rapid growth of AI and its transformative impact on society. It highlights the need for global cooperation to create AI systems that are not only innovative and efficient but also align with ethical principles and contribute to the achievement of the Sustainable Development Goals (SDGs).

The resolution stresses the importance of developing AI in a way that respects human rights, is transparent, and prioritises safety. It encourages member states to adopt common standards for AI, ensuring that these technologies are used to benefit society as a whole – improving lives, boosting economies, and protecting the environment.

At the same time, it calls for measures to manage the risks associated with AI, including safeguarding privacy, preventing biases, and ensuring accountability for AI systems’ actions. Although not legally binding, this resolution serves as a call to action for the international community to come together, set clear standards, and ensure that AI is used ethically and safely for the benefit of all people, leaving no one behind. It’s about making sure that as AI continues to shape our world, it does so in a way that’s both positive and aligned with our shared values.

Another important UN document is Governing AI for Humanity: Final Report, issued in September 2024 by the UN’s High-level Advisory Body on Artificial Intelligence.

The report highlights the need for a global approach to managing AI. It stresses that while AI has the potential to improve many areas of our lives, such as healthcare, agriculture, and energy, it also brings risks like bias, privacy concerns, and job disruptions. The report calls for cooperation between countries and organisations to create rules and frameworks that ensure AI benefits everyone, rather than just a few. It emphasises the importance of inclusivity, fairness, and accountability in AI governance, so that no one is left behind as this technology continues to grow and impact our world.

The EU’s AI Act: Leading the Charge in AI Regulation

The EU’s AI Act is a game-changer in how AI is regulated. It’s one of the most detailed and enforceable sets of rules for AI systems, and it directly affects how AI is developed and used within the EU. This law is legally binding, meaning businesses must follow its rules if they want to operate in the EU. While the AI Act isn’t a global treaty, its influence will likely spread far beyond Europe, simply because the EU is such an important market.

The law sorts AI systems into risk categories, from minimal to high, with stricter requirements for higher-risk applications and outright bans on practices deemed to pose an unacceptable risk. For example, companies need to be transparent about how their AI works, take steps to avoid risks, and ensure their systems are safe and ethical. This means that developers, users, and anyone else involved with AI will have clear responsibilities.

Even businesses outside of Europe are likely to pay attention to the EU’s rules. To access the European market, they may adopt these guidelines, ensuring their AI is up to par with EU standards. In doing so, the AI Act could help set a global standard, influencing how other countries regulate AI in the future.

Why This Matters

As AI becomes more advanced, having consistent standards in place can protect people’s rights and ensure that the technology is used safely and ethically. Legally binding instruments, like the Council of Europe’s Framework Convention and the EU AI Act, will likely have a significant impact, as they set hard rules that companies and governments must follow.

On the other hand, non-binding agreements like the OECD’s Principles or the Hiroshima Process are influential but rely on countries and companies choosing to adopt these guidelines on their own. They don’t carry legal penalties, but they help create a shared understanding of what responsible AI should look like.

For businesses, staying updated on these regulations is essential. Countries may adopt these guidelines in their own laws over time, and being proactive in aligning with these principles can prevent issues down the road. In the end, whether binding or voluntary, these efforts reflect a global push to make sure AI serves everyone fairly and safely.

This evolving landscape shows that AI regulation isn’t just about creating restrictions; it’s about setting a foundation for technology that supports human well-being. By working together, countries are laying the groundwork for a future where AI is not only innovative but also aligned with our shared values and goals.

Disclaimer: This blog post is intended solely for informational purposes. It does not offer legal advice or opinions. This article is not a guide for resolving legal issues or managing litigation on your own. It should not be considered a replacement for professional legal counsel and does not provide legal advice for any specific situation or employer.