The EU AI Act – Guide for Businesses

What is the EU AI Act?

The EU Artificial Intelligence Act (EU AI Act) is the world's first comprehensive legal framework designed specifically for AI. Its goal is to ensure that AI systems used across the European Union are trustworthy, transparent, and aligned with fundamental rights and democratic values.

Whether your company is developing AI tools, integrating them into existing services, or simply using third-party systems, this legislation may apply to you – regardless of whether you are based within the EU or outside of it.

Risk-Based Classification and Regulatory Requirements

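The EU AI Act introduces a risk-based approach. A small set of practices considered to pose an unacceptable risk, such as social scoring, are banned outright, and other AI systems are classified into risk categories: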

• High-risk systems, often found in sensitive areas like recruitment, education, healthcare, or law enforcement, must comply with strict requirements.

• Systems presenting limited risk, such as customer service chatbots, will need to meet basic transparency standards.

• Most AI tools fall into the minimal-risk category and are not subject to specific obligations under the Act, but businesses using them are still expected to operate responsibly.

The law sets clear expectations for how AI should be designed, tested, implemented, and monitored, not just by developers but also by those who place such systems on the EU market or use them.

Who needs to comply?

The EU AI Act applies broadly. It covers companies located in the EU, as well as any organisation outside the EU that provides AI systems or general-purpose models to EU-based users.

That means if you’re building AI models, offering SaaS platforms powered by AI, or simply using tools like ChatGPT or image-generation systems in a way that could affect individuals in the EU, the Act is likely relevant to your operations.

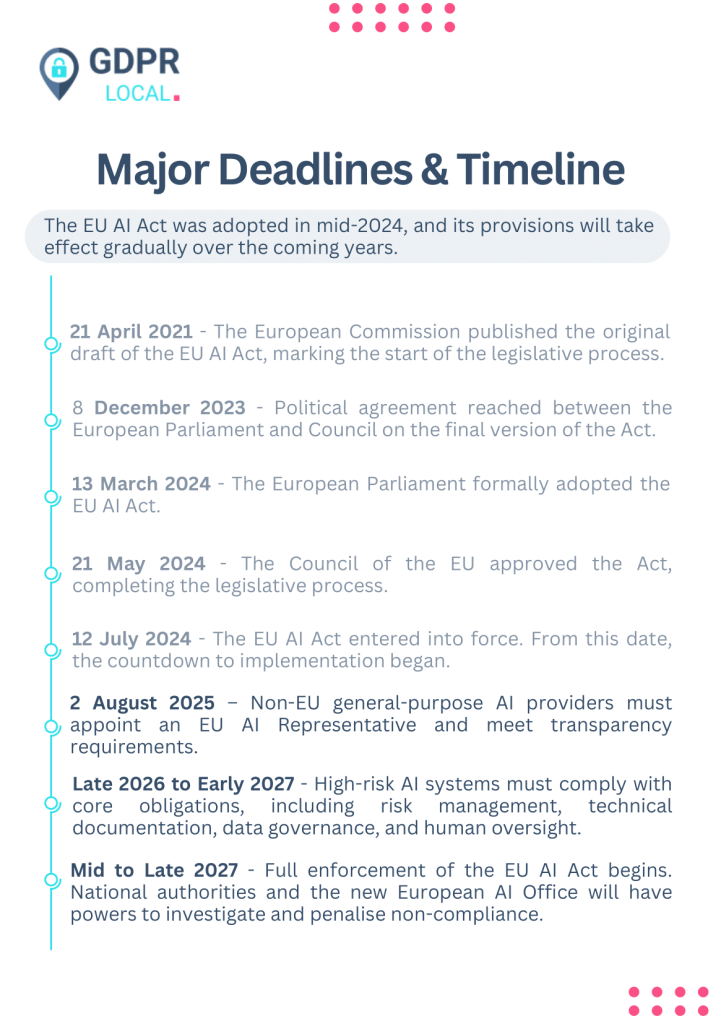

Deadlines

One of the first major deadlines was 2 August 2025. From this date, providers of general-purpose AI models, such as large language models and foundation models, must comply with transparency and documentation obligations. If the provider is located outside the EU, they must also appoint an Authorised AI Representative based within the EU.

Later deadlines apply to high-risk AI systems: most of their obligations are scheduled to apply from 2 August 2026, with full application expected by August 2027. These include requirements for risk management, technical documentation, data governance, and human oversight.


What should you be doing now?

The first step is to gain a clear picture of how your organisation interacts with AI. Are you using AI in customer service, decision-making, recruitment, or marketing? Are you developing your own models or integrating general-purpose tools into your services?

If you’re based outside the EU and offer general-purpose AI models to EU users, you must appoint an Authorised AI Representative; this obligation has applied since 2 August 2025. The representative acts as your local point of contact for EU regulators and ensures that the required documentation is in place.

If you use or distribute high-risk systems, begin preparing documentation now and assess how you collect, store, and monitor training and input data.

Internal policies, risk assessments, and clear human oversight procedures will be expected. Even for lower-risk uses such as customer service chatbots, you must be transparent, for example by telling users when they are interacting with AI and labelling AI-generated content where required.

What are the risks of ignoring the EU AI Act?

Non-compliance can be costly.

The EU AI Act includes serious penalties: for the most serious breaches, fines can reach €35 million or 7% of global annual turnover, whichever is higher. Beyond financial risks, companies may face service bans, legal action, and reputational damage.
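To make the "whichever is higher" rule concrete, here is a minimal Python sketch. The function name `max_fine_eur` and the use of whole-euro integer turnover are illustrative assumptions. The sketch computes only the statutory ceiling for the top tier of fines, not the fine a regulator would actually impose, and it does not model the separate rules for lower-tier breaches or for SMEs.

```python
# Hypothetical helper (not an official tool): computes the ceiling for the
# top tier of fines under the "whichever is higher" rule. It returns a legal
# maximum, not an estimate of any actual fine.

FIXED_CAP_EUR = 35_000_000  # €35 million fixed amount
TURNOVER_PERCENT = 7        # 7% of global annual turnover


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the higher of the fixed cap and 7% of turnover (whole euros, rounded down)."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding in the percentage.
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # 7% of €100m is €7m, so the €35m fixed cap is the higher figure.
    print(max_fine_eur(100_000_000))
    # 7% of €1bn is €70m, which exceeds the fixed cap.
    print(max_fine_eur(1_000_000_000))
```

Up to a global annual turnover of €500 million, the €35 million fixed amount is the higher figure; above that, the 7% share becomes the ceiling.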

Enforcement is also expected to be strict. National regulators will supervise most AI systems, while the new European AI Office oversees general-purpose AI models; both will monitor and respond to violations. Businesses that act early will be better positioned to respond to audits or complaints down the line.

Key points to keep in mind

• You don’t need to be based in the EU for this regulation to affect you.

• General-purpose AI models, such as those powering content generators and chatbots, are specifically covered.

• Transparency and accountability are core principles of the regulation. If you use AI in your business, make sure you understand what needs to be disclosed to users.

Compliance is not just a legal obligation – it’s an increasingly competitive advantage in a privacy-conscious market.

How we support our clients

We help businesses understand where they fall under the EU AI Act and guide them through their compliance obligations. Whether you’re building, providing, or simply using AI tools, we offer expert support to help you prepare.

From assessing your risk category to supporting documentation and training, we’re here to help you manage this new compliance landscape with clarity and confidence.

GDPRLocal AI Services