Deepfakes and the Future of AI Legislation: Ethical and Legal Challenges

Updated: August 2025

The advent of AI has brought about revolutionary tools that are reshaping how we interact with technology and information. Among these advancements, deepfakes stand out as one of the most intriguing yet controversial innovations. By leveraging machine learning techniques such as deep neural networks, deepfakes enable the creation of highly realistic fake videos, audio, and images that replicate people’s appearances and voices with remarkable precision.

What began as a technological novelty has quickly evolved into a phenomenon with profound implications. While deepfake technology holds exciting potential in areas such as entertainment, education, and the creative industries, it also poses significant risks. From doctored celebrity videos to fabricated political statements, the misuse of this technology is eroding trust in media and public discourse.

As deepfakes become more sophisticated and accessible, their potential for abuse has sparked debates over ethical considerations and legal accountability. The key challenge lies in harnessing the positive potential of deepfake technology while minimising its harmful impacts.

This blog post examines the ethical dilemmas, legal challenges, and future approaches to regulating this rapidly evolving technology.

What Are Deepfakes?

Deepfakes are synthetic media created using AI, particularly deep learning techniques such as autoencoders, generative adversarial networks (GANs) and, more recently, diffusion models. They produce highly realistic images, videos, or audio that mimic the appearance and voice of real people.
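The GANs mentioned here can be summarised in a single equation. In the standard formulation introduced in 2014, a generator G turns random noise z into a synthetic sample, while a discriminator D estimates the probability that a given sample is real. The two networks are trained against each other on the objective below. This is the textbook version, shown for intuition only: practical deepfake tools typically use modified objectives or different architectures altogether.

```latex
\min_{G}\max_{D}\; V(D,G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D is rewarded for assigning high probability to real data x and low probability to generated samples G(z), while G is rewarded for fooling D. As training progresses, the fakes become harder to tell apart from real data, which is the property that makes deepfakes so convincing.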

Although the underlying techniques have legitimate creative uses, such as enhancing films or creating digital characters, deepfake technology has become increasingly accessible. Today, free tools and only basic technical skills are enough to generate convincing deepfakes.

While this innovation opens doors for creativity and entertainment, it also raises serious concerns about privacy, trust, and the authenticity of information.

The Problem with Deepfakes: Abuses and Misuse

While deepfakes hold promise in creative industries, their misuse poses significant challenges. The technology has been weaponised in various ways, threatening individuals, organisations, and societal trust.

Deepfake-Driven Misinformation

Deepfakes have become a powerful tool for spreading misinformation. By creating fake videos of public figures making false statements, they can manipulate public opinion, disrupt elections, or incite conflict. Their realism makes it harder for people to discern truth from fiction, eroding trust in news and media.

Harassment and Privacy Violations

Deepfakes are often exploited for malicious purposes, such as creating non-consensual explicit content. This has disproportionately targeted women, violating their privacy and causing emotional harm. Additionally, deepfakes have been used in blackmail schemes, impersonation scams, and to defraud businesses.

The accessibility of deepfake tools exacerbates these problems, allowing malicious actors to create and disseminate harmful content with ease. The resulting damage, whether reputational, emotional, or financial, highlights the urgent need for ethical and legal safeguards.

Ethical Challenges Posed by Deepfakes

Deepfakes present profound ethical challenges that extend beyond their technical capabilities. These issues impact trust, privacy, and societal values, raising questions about how we live in a world where reality can be so easily manipulated.

Trust in Media and Information

Deepfakes erode trust in media by making it increasingly difficult to distinguish between authentic and fabricated content. This undermines the credibility of legitimate news and amplifies the spread of disinformation. As people become more sceptical of what they see and hear, the broader trust in digital communication and public discourse is at risk.

Societal Impact on Vulnerable Groups

The misuse of deepfake technology often targets vulnerable groups, particularly women and minorities. Non-consensual explicit deepfake content has become a tool for harassment and exploitation, disproportionately affecting women. Similarly, the technology can be used to impersonate or defame individuals, exacerbating existing inequalities and power imbalances.

The ethical dilemma lies in striking a balance between innovation and accountability. While the potential of deepfakes in art, education, and entertainment is undeniable, their misuse demands a robust framework of ethical guidelines and public awareness.

Legal Challenges and Regulatory Efforts

The rapid proliferation of deepfake technology has outpaced the development of legal frameworks needed to address its misuse. While some countries have begun to introduce regulations, significant gaps remain in addressing the global challenges posed by deepfakes.

Existing Laws and Their Limitations

Current legal systems often rely on traditional frameworks, such as:

• Defamation/Libel Laws: These can be used if a deepfake makes false statements that damage someone’s reputation. However, proving intent to harm can be difficult.

• Copyright Infringement: If a deepfake uses copyrighted material (e.g., footage from a movie), copyright laws may apply. But this doesn’t address the core harm of misrepresentation.

• Privacy Laws: These can be relevant if a deepfake uses someone’s likeness without consent, but often don’t fully cover the emotional distress or broader societal impact.

• Cybersecurity Laws: These might address the use of deepfakes in phishing scams or other online fraud, but not the broader issue of misinformation.

However, because these laws were not designed with deepfakes in mind, they often fail to address the technology's distinctive features: creators can remain anonymous, content spreads globally, and widespread harm can occur before anything is identified as fake. Proving direct, measurable harm from a deepfake can also be difficult, particularly in cases involving misinformation. Enforcement presents a further challenge, as the global nature of the internet makes it hard to apply national laws to deepfakes that are created or hosted in other countries.

Case Study: China’s Personal Information Protection Law (PIPL)

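China has taken proactive steps to regulate deepfake technology. Its Personal Information Protection Law (PIPL), in force since November 2021, is a general data protection law rather than a deepfake-specific statute, but it classifies biometric data such as facial images as sensitive personal information, which generally requires an individual's separate consent before it can be processed, including for use in synthetic media. This consent requirement helps guard against identity theft, privacy violations, and reputational harm caused by deepfakes.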

Building on the PIPL, China has introduced rules that mandate the labelling of deepfake content, helping users identify manipulated media. These measures illustrate how targeted legislation can mitigate the ethical and legal risks associated with deepfakes.

Furthermore, the “Deep Synthesis Provisions,” which took effect in January 2023, require deepfake service providers to verify their users' real identities and review the content they generate. These obligations fall directly on those creating and distributing deepfake media, reinforcing China’s commitment to tackling the challenges posed by this technology.
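Labelling rules like China's require synthetic content to be identified as such, but they do not prescribe the data format sketched here. As a purely illustrative example, the Python below builds a hypothetical machine-readable disclosure record. The field names, the `SyntheticMediaLabel` class, and the `label_content` and `label_matches` helpers are all assumptions, not part of any regulation or standard (industry efforts such as C2PA define their own, cryptographically signed formats). Binding the label to a SHA-256 hash of the content means any later edit to the media shows up as a mismatch.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class SyntheticMediaLabel:
    """Hypothetical disclosure record for AI-generated media (not a legal format)."""
    content_sha256: str   # fingerprint tying the label to these exact bytes
    ai_generated: bool    # the core disclosure: this content is synthetic
    generator: str        # hypothetical identifier of the tool that made it
    created_at: str       # ISO 8601 timestamp in UTC
    visible_notice: str   # human-readable text to show alongside the media


def label_content(content: bytes, generator: str) -> SyntheticMediaLabel:
    """Build a label whose hash binds it to this specific piece of content."""
    return SyntheticMediaLabel(
        content_sha256=hashlib.sha256(content).hexdigest(),
        ai_generated=True,
        generator=generator,
        created_at=datetime.now(timezone.utc).isoformat(),
        visible_notice="This content was generated or altered by AI.",
    )


def label_matches(content: bytes, label: SyntheticMediaLabel) -> bool:
    """Return True only if the content is byte-for-byte what was labelled."""
    return hashlib.sha256(content).hexdigest() == label.content_sha256


if __name__ == "__main__":
    media = b"placeholder synthetic video bytes"
    label = label_content(media, generator="example-generator-v1")
    print(json.dumps(asdict(label), indent=2))
    print(label_matches(media, label))          # True
    print(label_matches(media + b"x", label))   # False: content was altered
```

The sketch also makes a limitation visible: a separate label record can simply be discarded, which is one reason labelling rules may combine machine-readable metadata with notices shown directly to viewers.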

Global Trends in AI Legislation

Globally, there is growing recognition of the need for AI-specific legislation, and governments are starting to address the challenges posed by deepfakes, with varying degrees of regulation:

• The EU has been a forerunner in AI and digital media regulation with the Artificial Intelligence Act (AI Act) and the Digital Services Act (DSA). The AI Act, which entered into force in August 2024, treats deepfakes primarily through transparency obligations rather than the stricter rules for high-risk systems: those who deploy AI systems to create deepfakes must disclose that the content has been artificially generated or manipulated. The DSA addresses harmful content online more broadly and requires the largest platforms to assess and mitigate systemic risks, which can include the spread of manipulated media.

• The US has no comprehensive federal legislation specifically targeting deepfakes. Broad federal bills such as the DEEP FAKES Accountability Act have been proposed but not passed, although the narrower TAKE IT DOWN Act, signed into law in May 2025, criminalises publishing non-consensual intimate imagery, including AI-generated depictions, and requires platforms to remove such content on request. Otherwise, a patchwork of state laws addresses specific harms, with states such as California enacting laws against deepfakes created or distributed with intent to harm, especially in cases involving pornography and election interference.

• The UK has taken a more cautious approach but recognises the potential for deepfakes to cause significant harm. The Online Safety Act 2023 requires platforms to take responsibility for illegal and harmful content, including deepfakes, and made sharing intimate images, including deepfakes, without consent a criminal offence. However, the UK still has no comprehensive regulation directly addressing deepfakes.

• Australia has largely addressed deepfakes through existing defamation and privacy law, and in 2024 it criminalised sharing non-consensual sexually explicit deepfakes through the Criminal Code Amendment (Deepfake Sexual Material) Act 2024. The government has also proposed a broader review of media regulation in the context of emerging technologies, which could lead to more targeted efforts against deepfakes.

• Other Countries: Many other countries are in the early stages of developing AI strategies and regulations, with deepfakes being a key concern.

• Given the borderless nature of the internet, international cooperation is key in regulating deepfakes. Organizations such as the United Nations and OECD are exploring frameworks for global regulation, though no universally adopted standards currently exist.

While existing laws provide some recourse against the harms of deepfakes, there is a growing trend towards AI-specific legislation that directly addresses the technology's unique challenges. Approaches vary across the globe, but common themes include transparency, a focus on harm, and risk-based regulation. Progress has been made, but much remains to be done to establish comprehensive, enforceable rules, and effective regulation will require a combination of legal, technical, and educational efforts.

Why This is Important for Companies and Their Responsibilities

The rise of deepfakes highlights the transformative and disruptive potential of AI technologies. For companies, understanding and addressing the challenges and opportunities posed by deepfakes is not just a matter of ethics – it’s a strategic imperative. Businesses must be aware of the potential risks that deepfakes bring, including reputational damage, erosion of consumer trust, and legal liabilities. At the same time, they can harness the technology’s creative potential responsibly to drive innovation and competitiveness.

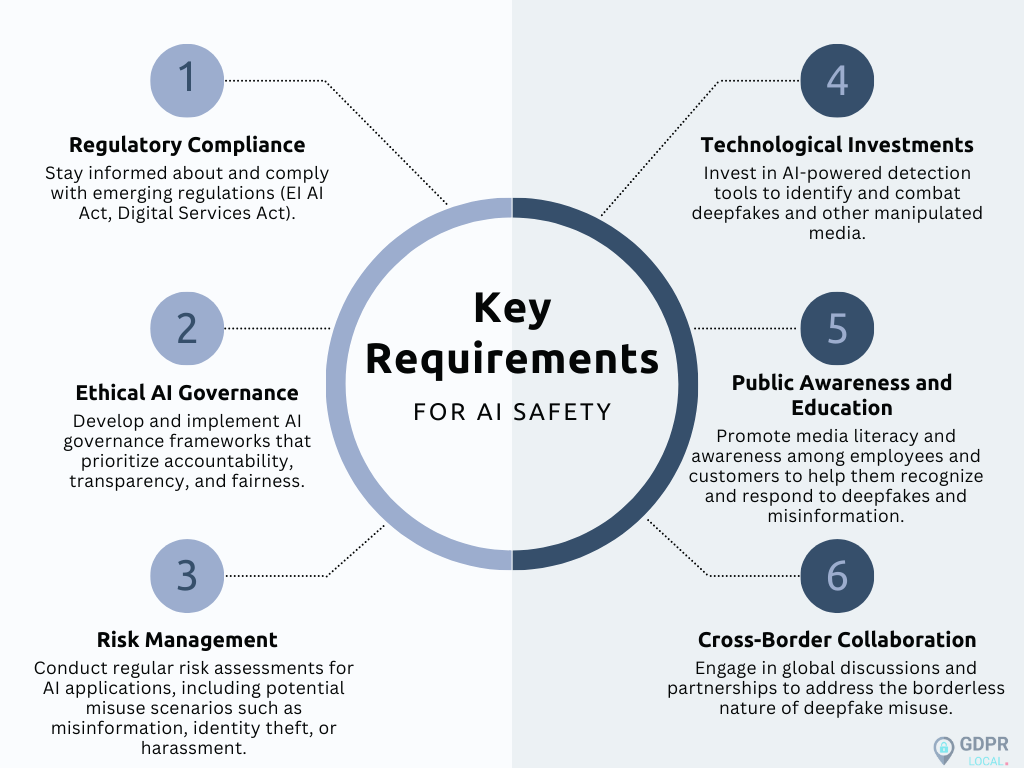

To mitigate the risks and capitalize on the opportunities, companies must fulfill several key requirements:

By addressing these requirements, companies can not only protect themselves from potential risks but also demonstrate leadership in ethical AI practices. In doing so, they strengthen trust with stakeholders, foster innovation responsibly, and contribute to shaping a future where AI technologies, including deepfakes, are leveraged as forces for good.

Balancing Innovation and Regulation

As deepfake technology evolves, striking a balance between its potential benefits and risks is essential. Achieving this requires coordinated efforts from policymakers, technologists, and the public.

Encouraging ethical innovation involves promoting positive applications in education, healthcare, and entertainment while discouraging malicious uses. Governments must strengthen regulations by mandating the labelling of synthetic media, enforcing consent requirements, and addressing cross-border challenges through international cooperation.

Public awareness and media literacy are also crucial in combating manipulation, enabling individuals to identify and question suspicious content. Additionally, investing in AI-powered detection tools can help preserve trust in digital content, although detection methods must keep evolving to stay ahead of increasingly sophisticated fakes.

Conclusion: Building a Safer AI-Driven World

Deepfakes represent both the promise and peril of AI. While they open doors to creative and transformative possibilities, their potential for harm cannot be ignored. Addressing the challenges posed by deepfakes necessitates a multifaceted approach that integrates ethical considerations, robust legal frameworks, and technological solutions.

The journey to a safer AI-driven world is ongoing, but with proactive measures and collective action, it is a goal within reach. Deepfakes are a reminder that technology is only as beneficial as the values guiding its use.

Disclaimer: This blog post is intended solely for informational purposes. It does not offer legal advice or opinions. This article is not a guide for resolving legal issues or managing litigation on your own. It should not be considered a replacement for professional legal counsel and does not provide legal advice for any specific situation or employer.