Europe AI Act: New Regulations on Artificial Intelligence

The Europe AI Act is a regulation aimed at ensuring the safe and ethical development of AI in the EU. It categorizes AI systems by risk level and imposes strict requirements on high-risk AI to protect users’ rights and safety. In this article, you’ll discover the key elements of the Act, its enforcement mechanisms, and its impact on AI innovation.

Key Takeaways

• The Europe AI Act establishes a comprehensive framework for the regulation of AI technologies within the EU, focusing on risk-based classifications to ensure safety and ethical deployment.

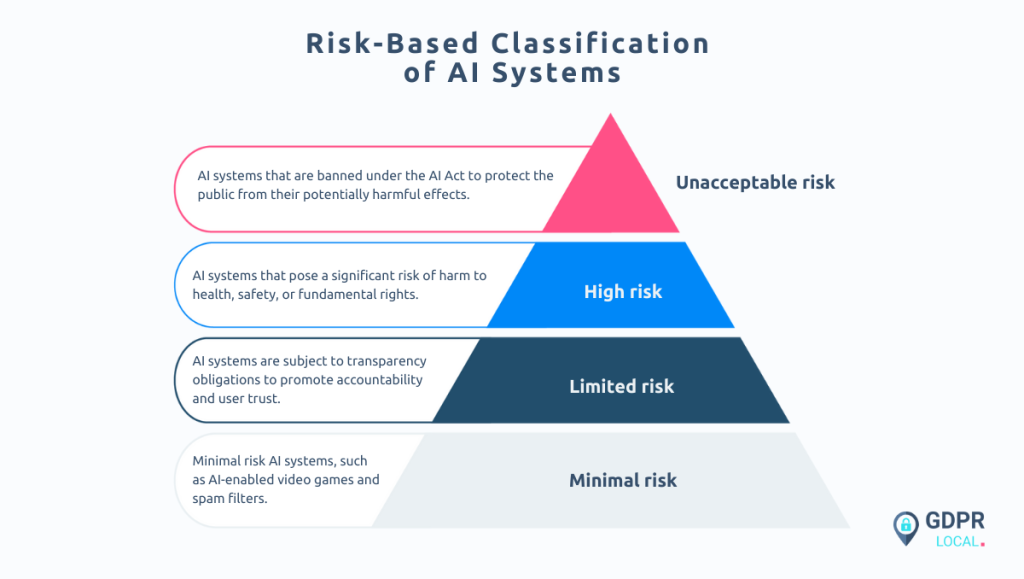

• AI systems are categorized into four risk levels (unacceptable, high, limited, and minimal), each with specific regulatory obligations designed to balance innovation with public safety.

• Transparency and accountability measures are central to the AI Act, requiring AI providers to inform users about AI interactions and ensuring ongoing compliance and monitoring of high-risk systems.

Understanding the Europe AI Act

The Europe AI Act is a cornerstone of the EU’s digital strategy, designed to foster the responsible development and use of AI technologies. As the first comprehensive legal framework on AI worldwide, it establishes harmonized rules for the development, placing on the market, and use of AI systems based on their risk levels. This pioneering legislation sets a global precedent, reflecting the EU’s commitment to ensuring that AI is developed and deployed ethically.

One of the Act’s defining features is its broad applicability. The rules apply not only within the EU but also to providers in non-EU countries that place AI systems on the EU market. Applying the same rules to everyone serving the EU market promotes a level playing field and helps build trust in AI technologies. The AI Act entered into force on 1 August 2024, marking a significant milestone in the global regulation of AI.

The AI Act categorizes AI systems by risk levels, each with specific regulatory obligations. This risk-based approach ensures that the regulatory burden is proportional to the potential risks posed by the AI system. For instance, high-risk AI systems are subject to more stringent requirements compared to minimal risk systems. The Act also emphasizes the importance of human oversight to minimize potential harmful effects associated with AI technologies.

The legislation seeks to create a flexible definition of AI that can adapt to future technological advances. This forward-looking approach ensures that the AI Act remains relevant as AI technologies evolve. Ultimately, the AI Act aims to build trust in AI offerings among the European populace, ensuring that the benefits of AI are realized while safeguarding fundamental rights and values.

Risk-Based Classification of AI Systems

The AI Act classifies AI systems by the potential risks they pose and attaches specific regulatory obligations to each class. This classification is the foundation of the Act, as it allows regulation to balance innovation with safety.

The risk categories are:

• Unacceptable risk

• High risk

• Limited risk

• Minimal risk

Each category comes with specific requirements designed to mitigate the identified risks.

AI developers and users must understand these risk levels. Unacceptable risk AI systems are banned outright due to their potential to cause significant harm, while high-risk AI systems face stringent regulatory requirements. Limited risk AI systems must meet transparency obligations to ensure user awareness, and minimal risk AI systems operate with minimal regulatory intervention.

This nuanced approach enables the EU to regulate AI systems in a manner that is both effective and proportionate.

Unacceptable Risk AI Systems

Unacceptable risk AI systems are those that pose a clear threat to individuals’ safety and rights. Under the AI Act, such systems are outright banned to protect the public from their potentially harmful effects. These prohibited AI practices include systems that could lead to significant violations of fundamental rights or endanger individuals’ safety. The prohibition of these systems underscores the EU’s commitment to safeguarding human dignity and rights in the face of advancing AI technologies.

The prohibited practices are listed in the Act itself, and the European Commission reviews that list regularly so that it keeps pace with technological advances and emerging threats. For instance, AI systems that use manipulative techniques or exploit people’s vulnerabilities to distort their behaviour are among the prohibited practices, because they can cause significant harm and undermine trust in AI.

The challenges posed by unacceptable risk AI systems are significant. These systems can create undesirable outcomes that are difficult to assess and mitigate. For example, AI technologies that lack transparency and fairness in decision-making processes can lead to discrimination and loss of trust.

By banning these practices outright, the AI Act aims to prevent serious harm and promote trustworthy AI development.

High-Risk AI Systems

High-risk AI systems are those that pose a significant risk of harm to health, safety, or fundamental rights. They include AI systems used as safety components of products already covered by EU product legislation, such as medical devices, as well as systems used in sensitive areas such as critical infrastructure, education, employment, and law enforcement. The potential for these systems to influence behaviour, cause discrimination, or infringe on fundamental rights necessitates stringent regulatory oversight.

To mitigate these risks, high-risk AI systems must comply with horizontal mandatory requirements and undergo conformity assessment procedures. This includes ensuring high-quality datasets and implementing robust risk assessment processes. Such measures are designed to prevent harm and ensure that these systems operate safely and ethically.

Transparency is a key requirement for high-risk AI systems. Providers must supply deployers with detailed instructions for use covering the system’s operation, capabilities, and limitations. This ensures that deployers understand how to use these systems safely and effectively. Separately, providers of general-purpose AI models must draw up technical documentation describing, among other things, how their models were trained and evaluated. This transparency is crucial for building user trust and ensuring informed decision-making.

Post-market monitoring is another critical aspect of regulating high-risk AI systems. Providers are required to implement systems for monitoring their AI technologies after they have been placed on the market. Any serious incidents or malfunctions must be reported to the relevant authorities.

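The European Commission can also amend the list of high-risk use cases in response to new circumstances, ensuring that the regulations remain relevant and effective. The high-risk requirements apply after a transition period: most take effect on 2 August 2026, while those for AI systems embedded in products covered by existing EU product legislation take effect on 2 August 2027, giving providers time to comply with the new rules.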

Limited Risk AI Systems

Limited risk AI systems are subject mainly to transparency obligations that promote accountability and user trust. For example, people must be told when they are interacting with an AI system such as a chatbot, and content generated or manipulated by AI must be identifiable as such.

Transparency is essential for these systems to ensure that users can make informed decisions about their interactions with AI. This requirement supports the broader aim of the AI Act to foster trust in AI technologies and promote their responsible use.

Informing users about AI involvement helps mitigate risks and enhances user experience.

Minimal Risk AI Systems

Minimal risk AI systems, such as AI-enabled video games and spam filters, pose negligible risks and therefore operate with minimal regulatory intervention. These low-risk applications do not require stringent oversight under the AI Act. Their minimal risk status reflects their limited potential to cause harm or violate fundamental rights.

Freedom from stringent regulation allows developers of these systems to innovate without significant bureaucratic hurdles. This keeps the regulatory framework proportionate and avoids stifling innovation in low-risk AI applications.

By focusing regulatory efforts on higher-risk systems, the AI Act directs oversight resources where they are most needed.

Transparency and Accountability Measures

Transparency and accountability are cornerstones of the AI Act, ensuring that AI systems operate in a manner that is understandable and trustworthy for users. AI systems interacting with humans must clearly inform users about the AI’s involvement unless it is obvious. This requirement helps users understand when they are engaging with AI, fostering a sense of trust and informed decision-making.

Deployers of emotion recognition and biometric categorization systems must inform the people exposed to them that the system is in operation, and must process any personal data in accordance with EU data protection law. This transparency is crucial for protecting individuals’ privacy and ensuring that AI systems are used responsibly.

Synthetic outputs generated by AI systems must be marked in a machine-readable format so that they can be detected as artificially generated. This helps prevent the misuse of AI-generated content and ensures that users are aware of the nature of the content they encounter.

Those deploying AI systems that create deepfakes must disclose the artificial nature of the content. This requirement is part of the broader goal of ensuring traceability and explainability in AI systems. By making AI systems transparent and accountable, the AI Act aims to build user trust and promote the responsible use of AI technologies.

Governance and Enforcement

The AI Act’s governance and enforcement mechanisms ensure effective implementation and adherence across the EU. The European AI Office will coordinate with national authorities to oversee the implementation of the AI Act in member states. This collaborative approach ensures that the regulations are consistently applied and that any issues are promptly addressed.

National competent authorities are vital in managing compliance at the national level. They must report serious incidents involving high-risk AI systems to the European AI Office and ensure compliance with the AI Act. Market surveillance mechanisms are essential for monitoring high-risk AI systems and ensuring they comply with the regulations.

Market surveillance authorities will evaluate compliance, particularly for high-risk AI systems, and may carry out unannounced inspections of AI systems that are being tested in real-world conditions.

An EU database for high-risk AI systems will enhance transparency and allow public access to relevant information. In cases of non-compliance, the AI Act empowers authorities to enforce corrective actions, including withdrawal or recall of AI systems. Ongoing oversight and risk management by AI providers are essential for maintaining trust in AI systems even after their market introduction.

Supporting Innovation and Compliance

The AI Act prioritizes supporting innovation alongside ensuring compliance. The Act includes provisions for national authorities to create regulatory sandboxes and testing environments for pre-release AI system testing. These sandboxes provide a controlled environment in which innovators can develop, train, and test their AI technologies under the supervision of competent authorities before placing them on the market.

The AI Act introduces support measures to reduce bureaucratic and financial challenges for small and medium-sized enterprises (SMEs). By providing targeted support, the Act aims to foster a vibrant AI ecosystem that encourages innovation while ensuring that SMEs can comply with regulatory requirements. Additionally, the AI Pact encourages early compliance with the AI Act by inviting developers to adopt its key obligations voluntarily.

The comprehensive AI Innovation Package aims to bolster the development of safe AI in Europe. This package includes various initiatives aimed at promoting the ethical and sustainable development of AI technologies while fostering international cooperation. By supporting innovation and ensuring compliance, the AI Act aims to create an environment where AI can thrive responsibly.

Future-Proofing AI Regulation

The AI Act is designed to adapt to rapid advancements in AI technology. This future-proof approach is crucial for maintaining the relevance and effectiveness of the Act over time. Incorporating a strategy for continuous evolution ensures the AI Act’s regulatory framework keeps pace with technological changes and new AI developments.

A key component of this strategy is ongoing quality and risk management even after AI systems have been placed on the market. This approach ensures AI providers continually monitor and mitigate risks, maintaining high safety and reliability standards. By allowing for adaptation through these measures, the AI Act fosters a dynamic regulatory environment that can respond to emerging challenges and opportunities in the AI landscape.

Trustworthiness and ethical development are central to the AI Act’s future-proofing strategy. By ensuring that regulations can evolve alongside technological advancements, the AI Act aims to promote the responsible use of AI while safeguarding fundamental rights and values. This forward-looking approach not only supports ongoing innovation but also reinforces the EU’s commitment to leading the global discourse on AI ethics and governance.

Summary

The Europe AI Act represents a pioneering effort to regulate AI in a manner that balances innovation with safety and ethical considerations. By categorizing AI systems based on their risk levels, the Act provides a nuanced regulatory framework that addresses the unique challenges posed by different types of AI technologies. From banning unacceptable risk AI systems to imposing stringent requirements on high-risk systems and ensuring transparency for limited risk systems, the AI Act aims to protect individual rights and safety while fostering trust in AI.

Looking ahead, the AI Act’s future-proof design ensures that it can adapt to technological advancements, maintaining its relevance and effectiveness over time. By supporting innovation through regulatory sandboxes and targeted measures for SMEs, the Act creates an environment where AI can thrive responsibly. As the world continues to grapple with the ethical and societal implications of AI, the Europe AI Act stands as a beacon of comprehensive and thoughtful regulation, paving the way for a future where AI is developed and used for the benefit of all.

Frequently Asked Questions

Will the EU AI Act apply to the UK?

The EU AI Act will not directly apply to the UK, but UK companies wishing to operate or sell AI products in the EU must comply with it, similar to the GDPR for data processing of EU citizens.

What is the current status of the EU AI Act?

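The EU AI Act entered into force on 1 August 2024 and generally applies from 2 August 2026, with exceptions set out in Article 113: the prohibitions and AI literacy obligations apply from 2 February 2025, the rules for general-purpose AI models from 2 August 2025, and the obligations for high-risk AI embedded in regulated products from 2 August 2027. The separately proposed AI Liability Directive was never adopted; the European Commission announced its withdrawal in February 2025.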

What is the Europe AI Act?

The Europe AI Act serves as the first comprehensive legal framework globally for regulating artificial intelligence, setting uniform rules for AI development and usage according to associated risk levels. This initiative seeks to ensure safe and trustworthy AI technologies across the European Union.

How does the AI Act categorize AI systems?

The AI Act categorizes AI systems into four risk levels: unacceptable risk, high risk, limited risk, and minimal risk, each imposing specific regulatory obligations. This structured approach ensures that the potential impact of AI systems is appropriately managed according to their associated risks.

What are unacceptable risk AI systems?

Unacceptable risk AI systems are defined as those that pose a direct threat to individuals’ safety and rights, leading to their prohibition under the AI Act to avert significant harm.