A Practical Guide to the EU AI Act for Operations Teams

If your organisation uses or develops artificial intelligence (AI) systems in any capacity, such as logistics, customer support, or automated decision-making, the EU AI Act is set to change how you manage those technologies. The Act, which entered into force in August 2024 and applies in stages, uses a risk-based framework to introduce new compliance requirements across various industries. This guide breaks down what ops teams need to know, from understanding AI risk classifications to practical steps you can take to prepare.

1. What Is the EU AI Act?

The EU AI Act aims to regulate AI across the European Union, classifying AI systems based on their potential risks to individuals and society. If your company operates in the EU or offers services to EU residents, you could be affected, even if you are based outside the EU. Since the Act is designed with extraterritorial reach in mind, it’s wise to get a head start on compliance.

For operations teams, this means reviewing existing AI systems, bringing them in line with the new standards, and making sure future deployments follow the Act’s requirements. By planning ahead, you’ll minimise disruption as the rules take effect.

2. Key Risk Classifications

The AI Act takes a risk-based approach. This guide groups its tiers into three primary risk levels:

1. Unacceptable Risk: These systems are banned outright because their uses are deemed a clear threat to individual safety or freedoms. An example is a social scoring system that evaluates people based on personal traits in a discriminatory manner.

2. High Risk: This category will likely concern most ops teams. High-risk systems often relate to critical areas like healthcare, financial services, education, and law enforcement. A system that significantly influences someone’s access to essential services (e.g., credit approval, job screening) could also fall here.

3. Low and Minimal Risk: AI applications like chatbots or gaming algorithms often fall into this bracket. Some of them, such as chatbots, still carry transparency requirements (for example, telling users that they are interacting with an AI), but they’re not as tightly regulated.

Ops teams should begin by reviewing which AI tools fall into these categories. If any of your systems might be “high-risk,” prepare for more rigorous documentation, auditing, and human oversight demands.

3. The Role of Operations Teams in AI Compliance

Implementing new regulations often lands in the operations department’s wheelhouse. Ops teams coordinate across various business units, such as product, engineering, and compliance, to ensure smooth daily operations. The EU AI Act adds specific tasks:

• Inventorying AI Systems: You’ll likely need to maintain an updated list of all AI tools, noting their purpose, data sources, and risk categories.

• Monitoring and Reporting: Consistent monitoring is required to oversee the performance and safety of AI systems. Ops teams should also prepare for potential audits.

• Collaboration with Legal and Compliance: Regularly sync with legal or compliance teams to incorporate new guidelines into standard operating procedures.
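The inventory task above can be sketched as a small data structure. This is a minimal illustration, not a format prescribed by the Act: the field names (`owner`, `last_reviewed`), the three-tier enum mirroring this guide's grouping, and the 180-day review interval are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class RiskTier(Enum):
    """Risk levels as grouped in this guide (not an official schema)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LOW_OR_MINIMAL = "low_or_minimal"


@dataclass
class AISystemRecord:
    """One row in a hypothetical AI system inventory."""
    name: str
    purpose: str
    data_sources: list[str]
    risk_tier: RiskTier
    owner: str            # assumed field: team accountable for the system
    last_reviewed: date   # assumed field: date of the last internal review


def high_risk_systems(inventory):
    """Return the records that need the heavier documentation and oversight."""
    return [r for r in inventory if r.risk_tier is RiskTier.HIGH]


def needs_review(record, today, max_age_days=180):
    """Flag a record whose last review is older than max_age_days (assumed policy)."""
    return (today - record.last_reviewed).days > max_age_days


inventory = [
    AISystemRecord("resume-screener", "rank job applicants", ["resumes"],
                   RiskTier.HIGH, "HR Ops", date(2024, 1, 10)),
    AISystemRecord("support-chatbot", "answer customer FAQs", ["help articles"],
                   RiskTier.LOW_OR_MINIMAL, "Support Ops", date(2024, 6, 1)),
]

for record in high_risk_systems(inventory):
    print(record.name, "overdue:", needs_review(record, today=date(2024, 9, 1)))
```

Keeping the inventory as structured records rather than a free-form spreadsheet makes it easier to filter by risk category and to spot entries that have not been reviewed recently.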

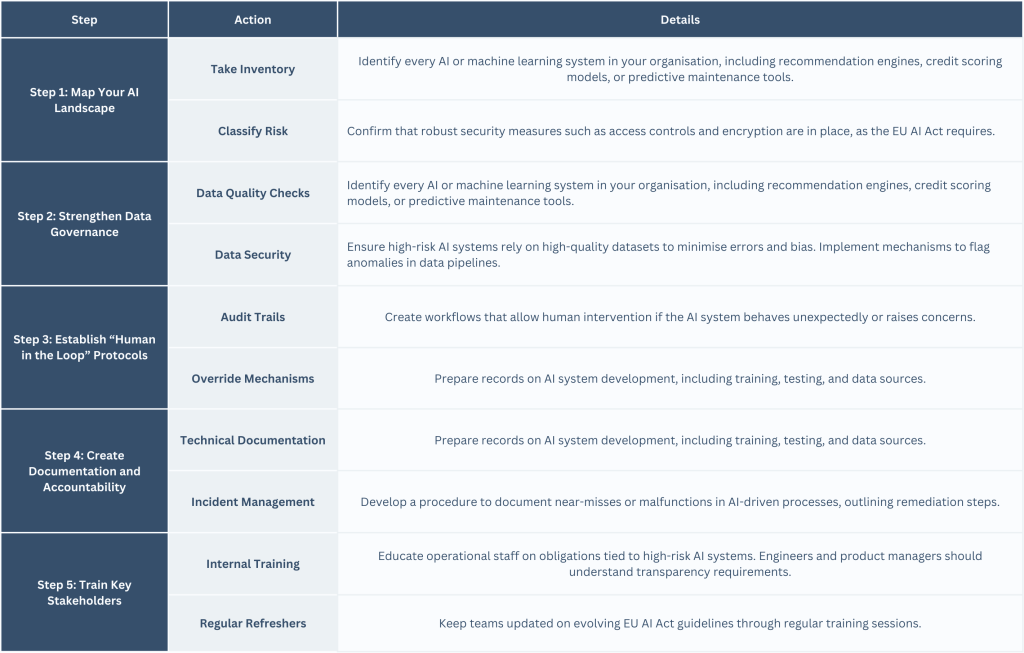

4. Practical Steps to Get Ready

Although many of the Act’s obligations apply only in stages, the text has enough clarity to start laying the groundwork now.

5. Real-World Example: AI in Recruiting

Imagine your HR department uses AI to screen resumes or conduct video interviews. Such a tool could be classified as high-risk because it influences crucial employment decisions.

• Ops Team Role: You must coordinate with HR and IT to ensure the AI vendor provides transparency about how the model is trained (e.g., is there a bias toward specific demographics?). You must also implement a way for HR staff to override AI decisions, particularly if the tool’s recommendation conflicts with internal fair hiring policies.

• Documentation: The operations manual for this AI tool would detail each workflow step, from input data (resumes, interview videos) to the AI’s decision output. Auditable logs would capture the final hiring verdict and any manual overrides.

Prepping ahead reduces the risk of legal complications when the EU AI Act’s high-risk rules are enforced in August 2026.
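The auditable log described in the recruiting example could look something like the sketch below. It is one possible shape under stated assumptions: the `ScreeningDecision` fields, the "advance"/"reject" labels, and the rule that every override must name a reviewer and a reason are hypothetical choices for illustration, not requirements quoted from the Act.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ScreeningDecision:
    """One screening outcome, recording both the AI output and the human verdict."""
    candidate_id: str
    ai_recommendation: str                 # e.g. "advance" or "reject" (assumed labels)
    final_decision: str                    # what HR actually decided
    reviewer: Optional[str] = None         # who made or confirmed the final call
    override_reason: Optional[str] = None  # why the AI output was not followed

    @property
    def overridden(self) -> bool:
        """True when the human verdict differs from the AI recommendation."""
        return self.final_decision != self.ai_recommendation


def to_log_line(decision, timestamp=None):
    """Serialise a decision as one JSON line; refuse overrides that lack context."""
    if decision.overridden and not (decision.reviewer and decision.override_reason):
        raise ValueError("An override must name a reviewer and a reason")
    logged_at = timestamp or datetime.now(timezone.utc)
    entry = asdict(decision)
    entry["overridden"] = decision.overridden
    entry["logged_at"] = logged_at.isoformat()
    return json.dumps(entry, sort_keys=True)


decision = ScreeningDecision(
    candidate_id="c-001",
    ai_recommendation="reject",
    final_decision="advance",
    reviewer="hr.lead",
    override_reason="meets internal fair hiring criteria",
)
print(to_log_line(decision))
```

Writing one JSON object per line keeps the log append-only and easy to search during an audit, and rejecting undocumented overrides at write time means gaps surface immediately rather than months later.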

6. How the EU AI Act Intersects with GDPR

If you already comply with GDPR, you have a strong foundation. Both GDPR and the EU AI Act emphasise data privacy, transparency, and accountability. However, the EU AI Act goes further by requiring technical documentation and “human in the loop” processes for higher-risk systems. Some key points of intersection:

• Data Processing: Ensure personal data used to train AI models aligns with GDPR principles (e.g., lawful basis for processing, minimal data collection).

• Individual Rights: GDPR gives individuals the right to understand how decisions about them are made. The AI Act will strengthen these rights further, especially around automated decisions.

Ops teams might find synergy in unifying privacy and AI compliance tasks, such as maintaining a single repository for data flow diagrams and AI risk assessments.

7. Future-Proofing Your AI Ops

The EU AI Act isn’t the only regulatory change on the horizon. Other jurisdictions, like the UK and the U.S., are also considering AI-related legislation. Here’s how you can future-proof your operations:

1. Standardised Documentation: Develop an internal standard for describing AI systems, risk levels, and data usage. This will help you adapt faster to new regulations.

2. Continuous Monitoring: Build metrics around AI performance, error rates, and user feedback. This approach helps you detect and report issues early if the law requires.

3. Cross-Functional Teams: Consider forming an “AI compliance committee” that meets periodically. This group can include ops, legal, IT security, and product leads.
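As a rough illustration of the continuous monitoring point, the sketch below computes an error rate per system over a reporting window and lists the systems that exceed a threshold. The `MonitoringWindow` fields and the 5% threshold are assumptions made for the example; your own metrics and limits should come from your risk assessment.

```python
from dataclasses import dataclass


@dataclass
class MonitoringWindow:
    """Counts collected for one AI system over one reporting period."""
    system_name: str
    total_decisions: int
    errors: int       # decisions later found to be wrong
    complaints: int   # user feedback flagged as negative

    def error_rate(self) -> float:
        """Share of decisions found to be wrong; 0.0 when nothing was decided."""
        if self.total_decisions == 0:
            return 0.0
        return self.errors / self.total_decisions


def systems_to_escalate(windows, max_error_rate=0.05):
    """Names of systems whose error rate exceeds the (assumed) threshold."""
    return [w.system_name for w in windows if w.error_rate() > max_error_rate]


windows = [
    MonitoringWindow("resume-screener", total_decisions=100, errors=10, complaints=3),
    MonitoringWindow("support-chatbot", total_decisions=100, errors=2, complaints=1),
    MonitoringWindow("new-pilot", total_decisions=0, errors=0, complaints=0),
]
print(systems_to_escalate(windows))
```

A simple threshold check like this is only a starting point, but it gives the compliance committee a consistent trigger for when a system needs a closer look.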

8. Potential Timelines and Enforcement

The EU AI Act entered into force in August 2024, and its obligations are phased in rather than applying all at once. Most provisions, including the high-risk rules, start to apply in August 2026, roughly two years after entry into force. However, waiting until the last minute can cause chaos, particularly for ops teams responsible for day-to-day functions.

Conclusion

The EU AI Act represents a significant shift in how AI systems will be designed, deployed, and monitored – especially if they fall under the high-risk category. As operations teams are often the linchpin connecting technical, legal, and business units, you’re in a prime position to steer compliance efforts effectively.

Focus on mapping your AI systems, ensuring data quality and security, and establishing clear documentation and human oversight processes. These steps prepare you for future EU AI Act requirements and enhance the overall reliability of your AI solutions.

Remember, this legislation will continue to evolve. By closely monitoring regulatory developments and adopting a structured approach, your ops team can lead the charge in building AI systems that meet legal obligations while delivering real value to your business.

Need Guidance?

If you need help interpreting the latest updates on the EU AI Act or want expert advice on implementing oversight measures, companies like GDPRLocal can guide you. Ensuring compliance is a team effort, but well-organised ops processes can make the journey more efficient.