How the EU AI Act Complements GDPR: A Compliance Guide

The EU AI Act has emerged as a groundbreaking piece of legislation. This new regulation aims to ensure the development and use of ethical AI systems across the European Union. We’ve seen how the General Data Protection Regulation (GDPR) has transformed data protection practices, and now the EU AI Act is set to have a similar impact on AI technologies.

In this article, we’ll explore how the EU AI Act complements the GDPR and what this means for businesses and organizations. We’ll break down the key differences between these two regulations, highlight where they overlap, and discuss the challenges and opportunities that come with compliance. By the end, you’ll have a clearer picture of how to align your AI practices with both the EU AI Act and GDPR requirements.

Key Differences Between the AI Act and GDPR

Scope and Applicability

We’ve noticed some key differences in how the EU AI Act and GDPR apply to organizations. The AI Act has a broader reach, covering providers, deployers (called “users” in earlier drafts of the Act), and other participants in the AI value chain. It applies to AI systems placed on or used in the EU market, regardless of where the companies are located. On the other hand, the GDPR focuses on controllers and processors that handle personal data related to EU activities or data subjects.

An interesting point to consider is that AI systems that don’t process personal data or that handle data of non-EU individuals can still fall under the AI Act but not the GDPR. This means we need to be aware of our obligations under both regulations, as they might not always overlap.
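The scope test described above can be sketched as a first-pass triage function. This is only an illustration of the reasoning, not legal advice: the `SystemProfile` class, its field names, and `applicable_regimes` are hypothetical, and each flag collapses a detailed legal test into a single yes/no answer.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical, heavily simplified description of one AI system."""

    on_eu_market: bool             # placed on or used in the EU market
    processes_personal_data: bool  # any personal data is processed
    eu_data_link: bool             # data relates to EU activities or EU data subjects


def applicable_regimes(profile: SystemProfile) -> set[str]:
    """Return which regulations plausibly apply (first-pass triage only)."""
    regimes: set[str] = set()
    # Assumption: AI Act scope is reduced to "is the system on the EU market?"
    if profile.on_eu_market:
        regimes.add("AI Act")
    # Assumption: GDPR scope is reduced to "personal data with an EU link".
    if profile.processes_personal_data and profile.eu_data_link:
        regimes.add("GDPR")
    return regimes


if __name__ == "__main__":
    # A system on the EU market that processes no personal data.
    system = SystemProfile(on_eu_market=True, processes_personal_data=False, eu_data_link=False)
    print(sorted(applicable_regimes(system)))
```

The two checks are deliberately independent, which mirrors the point that a system can fall under the AI Act without falling under the GDPR.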

Focus Areas

When it comes to focus areas, we see some distinct differences between these two regulations. The GDPR is all about protecting the fundamental right to privacy. It gives individuals the power to exercise their rights against those processing their personal data. The AI Act, however, takes a different approach. It treats AI more like a product, aiming to regulate it through product standards.

This difference in focus has practical implications. For instance, if we want to stop an unlawful AI system that uses personal data, we’d do that under the AI Act. But if we need to exercise data subject rights related to personal data, that’s where the GDPR comes in.

Regulatory Approach

The regulatory approach of these two acts also differs significantly. In the AI Act, we see that providers and deployers have specific obligations, but the bulk of the regulatory burden falls on providers, especially for high-risk AI systems. This is different from the GDPR, where responsibilities are allocated according to whether an organization acts as a controller or a processor.

Under the GDPR, providers usually act as controllers during the development phase. In a B2B context, they’re more likely to be processors during the deployment phase. There’s also the possibility of providers and deployers being joint controllers if they determine the purpose and essential means of processing together.

One interesting aspect is how the AI Act handles human oversight. It requires providers to implement interface tools for human oversight and to take measures to ensure it happens. However, it doesn’t give specific details on what these measures should be, which leaves some room for interpretation.

Another key difference is in risk assessments. The GDPR requires controllers to carry out Data Protection Impact Assessments (DPIAs). The AI Act acknowledges this and actually builds on it. It states that deployers of high-risk AI systems should use the information provided by the system provider to conduct their DPIAs. This shows how the two regulations can complement each other in practice.

We also need to be aware of some potential conflicts between the two regulations. For example, the AI Act provides an explicit exemption from the GDPR’s prohibition on processing special category data in certain circumstances related to bias monitoring and correction in high-risk AI systems. This could lead to situations where we have conflicting requirements under the two regulations.

Overlapping Areas and Synergies

We’ve seen how the EU AI Act and GDPR have their distinct focuses, but there are also areas where they overlap and complement each other. Let’s explore these synergies and how they impact compliance strategies.

Data Protection Principles

The GDPR and the EU AI Act share some common ground when it comes to data protection principles. While the GDPR is built around principles like lawfulness, fairness, transparency, purpose limitation, and data minimization, the EU AI Act incorporates similar concepts, albeit with a focus on AI systems.

The EU AI Act’s principles, outlined in Recital 27, include human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental wellbeing; and accountability. These principles are influenced by the OECD AI Principles and the seven ethical principles for AI developed by the independent High-Level Expert Group on AI.

We can see how these principles materialize in specific EU AI Act obligations. For instance, Article 10 prescribes data governance practices for high-risk AI systems, Article 13 deals with transparency, and Articles 14 and 26 introduce human oversight and monitoring requirements.

Understanding these overlaps can help us leverage our existing GDPR compliance programs when implementing AI Act requirements. It’s a smart way to lower compliance costs and ensure a more holistic approach to data protection and AI governance.

Risk Assessment Requirements

Both the GDPR and the EU AI Act have risk assessment requirements, but they approach them differently. Under the GDPR, we’re required to carry out Data Protection Impact Assessments (DPIAs) for high-risk personal data processing activities. The EU AI Act, on the other hand, introduces conformity assessments for high-risk AI systems.

While these assessments serve different purposes, they can complement each other. For instance, the technical documentation drafted for conformity assessments under the AI Act can provide valuable context for a DPIA. Similarly, if we’re deploying a high-risk AI system, the information from the conformity assessment can inform our DPIA process.

The EU AI Act also introduces Fundamental Rights Impact Assessments (FRIAs) for some high-risk AI systems. FRIAs are conceptually similar to DPIAs, aiming to identify and mitigate risks to fundamental rights arising from AI system deployment. This overlap provides an opportunity to streamline our assessment processes.

It’s worth noting that the same AI system could potentially be subject to different risk management requirements and classifications under each law. This means we need to be thorough in our assessments and consider both regulatory frameworks.
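To make the relationship between the three assessments more concrete, here is a minimal sketch of how we might list the assessments worth considering for a given system. The function name, its parameters, and the string labels are hypothetical. Each flag stands in for a legal determination that has to be made separately, and the assumption that conformity assessments sit with providers while FRIAs sit with deployers is a simplification.

```python
from __future__ import annotations


def assessments_to_consider(
    *,
    role: str,
    high_risk_ai_system: bool,
    high_risk_personal_data_processing: bool,
    fria_required: bool = False,
) -> list[str]:
    """List the assessments to look at; a planning aid, not a legal test."""
    if role not in ("provider", "deployer"):
        raise ValueError(f"unknown role: {role!r}")

    assessments: list[str] = []
    # GDPR: a DPIA is tied to high-risk personal data processing, whatever the AI Act role.
    if high_risk_personal_data_processing:
        assessments.append("DPIA (GDPR)")
    # AI Act: conformity assessment for high-risk systems (assumed here to sit with the provider).
    if high_risk_ai_system and role == "provider":
        assessments.append("Conformity assessment (AI Act)")
    # AI Act: FRIA for some high-risk systems (assumed here to sit with the deployer).
    if high_risk_ai_system and role == "deployer" and fria_required:
        assessments.append("FRIA (AI Act)")
    return assessments


if __name__ == "__main__":
    print(
        assessments_to_consider(
            role="deployer",
            high_risk_ai_system=True,
            high_risk_personal_data_processing=True,
            fria_required=True,
        )
    )
```

Keeping the GDPR check separate from the AI Act checks reflects the point that the same system can be classified differently under each law.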

Transparency Obligations

Transparency is a key principle in both the GDPR and the EU AI Act, but the AI Act expands on these requirements significantly. The AI Act sets out comprehensive transparency obligations for AI technology providers and deployers, which will co-exist with GDPR transparency requirements.

Under the AI Act, providers of high-risk AI systems must ensure their systems are designed and developed to be sufficiently transparent. This means providing enough information for deployers to understand how the system works, what data it processes, and how to explain the AI system’s decisions to users.

The AI Act also introduces specific transparency requirements for certain AI systems, including general-purpose AI systems. These include ensuring individuals are aware they’re interacting with an AI system (unless it’s obvious) and making sure synthetically generated audio, image, video, or text output is detectable as artificially generated.

We also see a push for systematic transparency through the creation of an EU database for high-risk AI systems. This database, to be set up and maintained by the Commission, aims to facilitate the work of EU authorities and enhance public transparency.

By understanding these overlapping areas and synergies, we can develop more effective compliance strategies that address both GDPR and EU AI Act requirements. This integrated approach not only helps us meet our legal obligations but also builds trust with our users and stakeholders in the evolving landscape of AI and data protection.

Compliance Challenges and Opportunities

Adapting Existing GDPR Processes

We’re facing an interesting challenge as we explore the intersection of the GDPR and the EU AI Act. While the GDPR has already had a profound impact on how we develop, deploy, and use AI technologies, the AI Act is set to introduce even more concrete compliance obligations. This means we need to take a prudent and risk-focused approach to ensure we’re meeting the requirements of both regulations.

One of the key opportunities we have is to leverage our existing GDPR compliance processes. For instance, the Data Protection Impact Assessments (DPIAs) we’re already conducting under GDPR can serve as a foundation for the new assessments required by the AI Act. We can use the information from these DPIAs to inform our approach to the AI Act’s requirements, potentially saving time and resources.

New Obligations Under the AI Act

The AI Act introduces significant new obligations, especially for high-risk AI systems. As providers, we need to establish risk management systems, ensure data quality, maintain technical documentation, implement human oversight, meet standards for accuracy, robustness and cybersecurity, set up post-market monitoring, and register our AI systems.

For those who are deployers of high-risk AI systems, our obligations are fewer but still important. We’ll need to focus on proper use and oversight of these systems. It’s crucial that we understand our role in the AI ecosystem, as this determines our specific obligations under the Act.
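One way to keep track of these role-dependent duties is a simple checklist keyed by role. The sketch below is illustrative only: the `OBLIGATIONS_BY_ROLE` mapping and the `outstanding_obligations` helper are hypothetical names, and the entries are shorthand for the obligations listed above rather than the Act’s wording.

```python
from __future__ import annotations

# Shorthand labels for the high-risk obligations discussed in this section.
OBLIGATIONS_BY_ROLE: dict[str, tuple[str, ...]] = {
    "provider": (
        "risk management system",
        "data quality",
        "technical documentation",
        "human oversight",
        "accuracy, robustness and cybersecurity",
        "post-market monitoring",
        "registration",
    ),
    "deployer": (
        "proper use",
        "oversight",
    ),
}


def outstanding_obligations(role: str, completed: set[str]) -> list[str]:
    """Return the checklist items for `role` that are not yet marked complete."""
    try:
        required = OBLIGATIONS_BY_ROLE[role]
    except KeyError:
        raise ValueError(f"unknown role: {role!r}") from None
    # Preserve the checklist order so reports stay stable between runs.
    return [item for item in required if item not in completed]


if __name__ == "__main__":
    done = {"technical documentation", "registration"}
    for item in outstanding_obligations("provider", done):
        print("TODO:", item)
```

An organization that acts as both provider and deployer for different systems would run the check once per system and role.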

One of the challenges we’re facing is the requirement for human oversight. The AI Act mandates that we implement interface tools to enable human oversight, but it doesn’t provide specific guidance on what measures we should take. We’ll need to develop clear instructions and training for our human decision-makers on how the AI system works, what kind of data it uses, what results to expect, and how to evaluate the system’s recommendations.

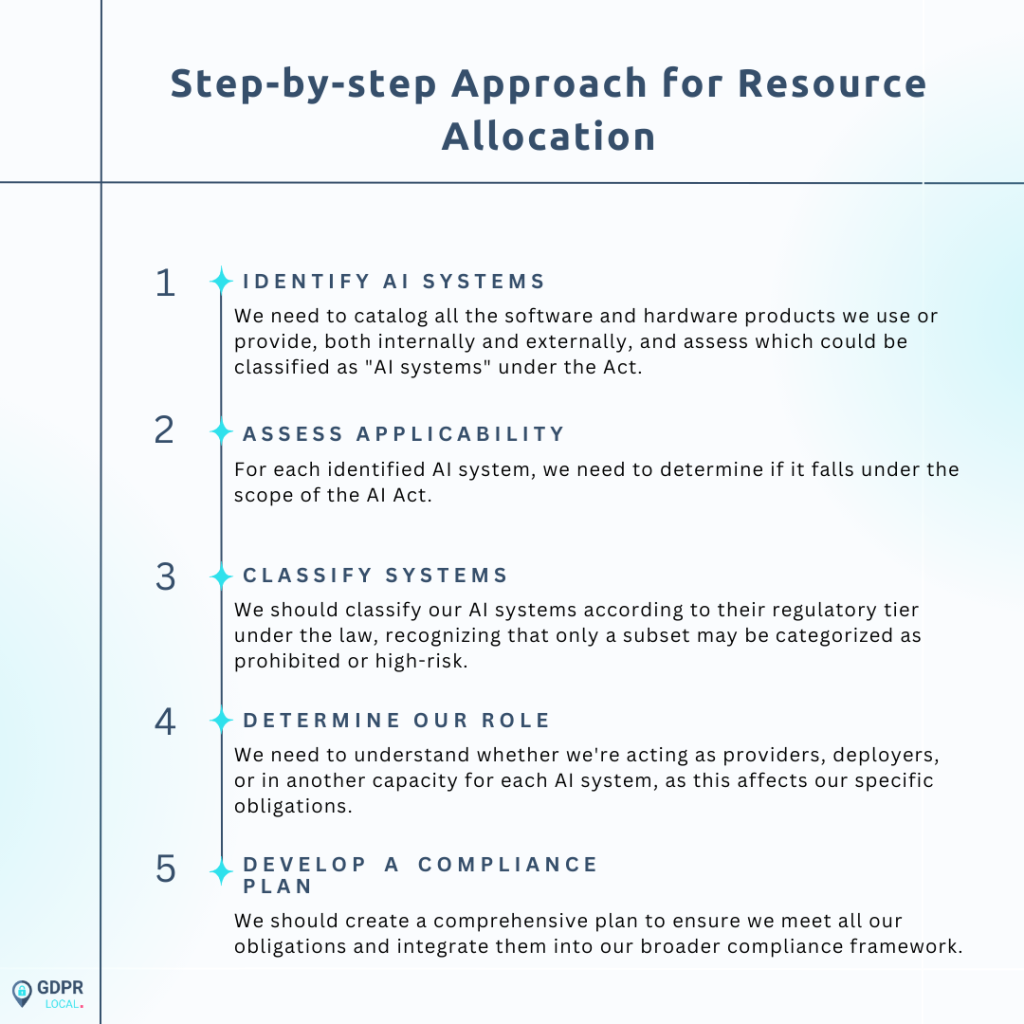

Resource Allocation

To meet these new obligations, we’ll need to allocate significant resources. Here’s a step-by-step approach we can take:

It’s worth noting that while most of the AI Act’s obligations only become applicable about two years after its entry into force (the Act entered into force on 1 August 2024, and most provisions apply from 2 August 2026), the requirements are substantial. Our experience with GDPR has shown that starting compliance efforts just a few months before the rules become applicable can lead to significant challenges. Therefore, it’s in our best interest to start preparing as early as possible, especially if we’re providers of high-risk AI systems.

By taking these steps and allocating the necessary resources, we can turn these compliance challenges into opportunities to enhance our AI governance and build trust with our users and stakeholders.

Conclusion

The EU AI Act and GDPR are shaping a new regulatory landscape for AI technologies in Europe. These two pieces of legislation, while distinct in their focus, work together to ensure responsible AI development and use. The AI Act’s broader scope and product-centric approach complement the GDPR’s emphasis on personal data protection, creating a comprehensive framework for AI governance.

As we move forward, organizations face both challenges and opportunities in aligning with these regulations. By leveraging existing GDPR processes and allocating resources to meet new AI Act requirements, companies can build trust with users and stakeholders. This approach not only helps to meet legal obligations but also lays a foundation for ethical AI practices. In the end, the synergy between the EU AI Act and GDPR will shape the future of AI in Europe, fostering innovation while safeguarding fundamental rights.

FAQs

How do the AI Act and GDPR interact?

The AI Act and GDPR work in tandem to protect individuals. The AI Act regulates AI systems themselves, including those that handle personal data, and aims to prevent their misuse, while the GDPR is concerned with safeguarding the rights of data subjects regarding their personal data. Essentially, the AI Act addresses the regulation of AI systems, and the GDPR ensures individuals can exercise their rights over their personal data.

What role does AI play in adhering to GDPR requirements?

Under the GDPR, any AI system that processes personal data needs a lawful basis for doing so. Consent is one such basis, and where we rely on it, it must be freely given, specific, informed, and unambiguous. In other situations, processing can rest on a different basis such as “legitimate interests,” which must also comply with GDPR standards.

What is the main goal of the EU AI Act?

The EU AI Act establishes the first legal framework specifically for AI, aiming to mitigate the risks associated with AI technologies and establish Europe as a leader in global AI regulation. It sets forth clear guidelines and responsibilities for AI developers and operators regarding the deployment and development of AI systems.

What are the requirements for compliance with the EU AI Act?

Requirements depend on your role and on the type of AI involved. For example, providers of general-purpose AI models must maintain comprehensive technical documentation of their models and make this information available to the AI Office upon request. They must also prepare documentation for downstream providers who integrate these models, ensuring a balance between transparency and intellectual property protection. Additionally, they need to put in place a policy to comply with Union copyright law.