How to Align AI with GDPR: A Compliance Strategy

Updated: October 2025

AI systems handle vast amounts of personal data, which creates new challenges for GDPR compliance. Companies that use AI must comply with the regulation, and infringements can result in fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher. Aligning AI with the GDPR requires careful consideration to protect personal data while ensuring AI systems function properly.
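As a worked example of how that upper-tier cap under Article 83(5) is calculated, the sketch below takes the higher of €20 million and 4% of an undertaking’s total worldwide annual turnover. The function name is hypothetical, turnover is assumed to be a whole number of euros, and the result is only the statutory maximum: actual fines depend on the factors in Article 83(2).

```python
def max_upper_tier_fine(turnover_eur: int) -> int:
    """Statutory ceiling for Article 83(5) infringements by an undertaking:
    the higher of EUR 20 million or 4% of total worldwide annual turnover.
    (Hypothetical helper for illustration only.)"""
    if turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    four_percent = turnover_eur * 4 // 100  # integer euros, rounded down
    return max(20_000_000, four_percent)


# EUR 1 billion turnover: 4% (EUR 40m) exceeds EUR 20m, so EUR 40m is the cap
print(max_upper_tier_fine(1_000_000_000))  # 40000000
# EUR 100 million turnover: 4% is only EUR 4m, so the EUR 20m figure applies
print(max_upper_tier_fine(100_000_000))  # 20000000
```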

Let’s look at how to align AI systems with GDPR rules while getting the most from their capabilities.

GDPR Requirements for AI Systems

The GDPR doesn’t explicitly mention AI, but it contains many provisions that substantially affect AI applications.

Key GDPR principles affecting AI implementation

AI systems must follow the fundamental principles set out in Article 5 of the GDPR. In practice, this means AI systems should:

• Process data transparently and accountably

• Collect only necessary data (data minimisation)

• Use data for specified, explicit purposes

• Maintain data accuracy and security

• Enable data subject rights

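Purpose limitation can accommodate AI development more flexibly than it first appears: under Article 5(1)(b), further processing for scientific research or statistical purposes is not considered incompatible with the original purpose, provided the safeguards in Article 89(1) are in place. Data minimisation needs careful thought to balance AI’s effectiveness with privacy protection.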

Legal basis for AI data processing

AI systems need an appropriate legal basis to process personal data lawfully. The GDPR lists six legal grounds for processing, though not all suit AI applications equally well. The development and deployment phases of AI data processing need separate handling because they serve different purposes.

Consent poses particular challenges in AI contexts. It may be appropriate where an organisation has a direct relationship with data subjects, yet obtaining valid consent for complex AI operations can prove tricky. Organisations must ensure their consent process meets four criteria: freely given, specific, informed and unambiguous.

Special categories of data considerations

The GDPR demands extra protection for special category data and prohibits processing it unless one of the specific conditions in Article 9 is met.

AI systems handling sensitive data must pay attention to:

• Racial or ethnic origin

• Political opinions

• Religious beliefs

• Biometric data

• Health information

AI applications that process special category data need both a lawful basis under Article 6 and a separate condition under Article 9, together with appropriate safeguards.
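A data protection team might encode this double requirement as a simple check in its record of processing activities. The sketch below assumes a hypothetical `ProcessingActivity` record and uses our own shorthand labels for the six Article 6(1) bases and the ten Article 9(2) conditions. Deciding which basis actually applies remains a legal judgement that no code can make; the check only catches entries where a ground is missing.

```python
from dataclasses import dataclass
from typing import List, Optional

# Shorthand labels for the six lawful bases in Article 6(1)(a)-(f)
ARTICLE_6_BASES = {
    "consent", "contract", "legal_obligation",
    "vital_interests", "public_task", "legitimate_interests",
}

# Shorthand labels for the ten conditions in Article 9(2)(a)-(j)
ARTICLE_9_CONDITIONS = {
    "explicit_consent", "employment_and_social_protection", "vital_interests",
    "not_for_profit_body", "manifestly_made_public", "legal_claims",
    "substantial_public_interest", "health_or_social_care",
    "public_health", "archiving_research_statistics",
}


@dataclass
class ProcessingActivity:  # hypothetical register entry
    name: str
    article_6_basis: Optional[str]
    uses_special_category_data: bool
    article_9_condition: Optional[str] = None


def legal_ground_gaps(activity: ProcessingActivity) -> List[str]:
    """Return missing legal grounds; an empty list means both tests are met."""
    gaps = []
    if activity.article_6_basis not in ARTICLE_6_BASES:
        gaps.append("no Article 6 lawful basis")
    if (activity.uses_special_category_data
            and activity.article_9_condition not in ARTICLE_9_CONDITIONS):
        gaps.append("special category data without an Article 9 condition")
    return gaps


training = ProcessingActivity("diagnostic model training", "legitimate_interests", True)
print(legal_ground_gaps(training))  # ['special category data without an Article 9 condition']
```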

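Privacy-enhancing technologies play a vital role in handling sensitive data. Anonymisation and pseudonymisation techniques help protect individual privacy while AI systems learn from large datasets. Note that pseudonymised data is still personal data under the GDPR; only properly anonymised data falls outside its scope.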
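One common pseudonymisation technique is to replace direct identifiers with a keyed hash before data reaches a training pipeline. The minimal sketch below uses HMAC-SHA256 from Python’s standard library; the record fields and the idea of storing the key in a separate secrets store are illustrative assumptions.

```python
import hashlib
import hmac
import secrets


def pseudonymise(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# The key is the "additional information" that must be kept separately
# (for example in a secrets manager), away from the training data.
key = secrets.token_bytes(32)

records = [
    {"email": "alice@example.com", "age": 34, "sessions": 12},
    {"email": "bob@example.com", "age": 41, "sessions": 3},
]

# Drop the email but keep a stable token, so rows for the same person still link up
training_rows = [
    {"user_token": pseudonymise(r["email"], key),
     **{k: v for k, v in r.items() if k != "email"}}
    for r in records
]
print(training_rows[0].keys())  # dict_keys(['user_token', 'age', 'sessions'])
```

A keyed HMAC is used rather than a plain SHA-256 hash because unkeyed hashes of email addresses can be reversed by hashing candidate addresses. Anyone holding the key can still re-identify individuals, which is exactly why the output remains personal data.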

Implementing Privacy by Design in AI Development

Privacy by Design in AI systems requires proactive rather than reactive measures, and Article 25 of the GDPR makes data protection by design and by default a legal requirement. Privacy safeguards should be built into AI systems from the start, making data protection a core part of development rather than an add-on.

Data minimisation strategies

Data minimisation is a basic principle of AI development. Under Article 5(1)(c), personal data must be “adequate, relevant and limited to what is necessary” for the purposes for which it is processed.

Here’s how to do it:

• Get a full picture of data needs before collection

• Design data collection processes that exclude unnecessary data fields

• Run regular data audits to confirm data relevance and necessity
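One way to put these steps into practice is a per-purpose allowlist: fields not declared for a purpose never enter the pipeline, and a periodic audit flags stored fields that exceed it. The purposes and field names below are hypothetical examples.

```python
from typing import Dict, Iterable, Set

# Hypothetical allowlists: the fields each declared purpose actually needs
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "churn_prediction": {"customer_id", "tenure_months", "plan", "monthly_usage"},
    "fraud_detection": {"transaction_id", "amount", "merchant_category", "timestamp"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only the fields declared as necessary for this purpose."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"undeclared purpose: {purpose}")
    allowed = ALLOWED_FIELDS[purpose]
    return {k: v for k, v in record.items() if k in allowed}


def audit_excess_fields(records: Iterable[dict], purpose: str) -> Set[str]:
    """Report fields held in stored records that the purpose does not need."""
    allowed = ALLOWED_FIELDS[purpose]
    excess: Set[str] = set()
    for record in records:
        excess |= set(record) - allowed
    return excess


raw = {"customer_id": 17, "tenure_months": 8, "plan": "basic",
       "monthly_usage": 42.5, "date_of_birth": "1990-02-01"}
print(minimise(raw, "churn_prediction"))
print(audit_excess_fields([raw], "churn_prediction"))  # {'date_of_birth'}
```

Failing loudly on an undeclared purpose is a deliberate choice: it forces a new use of the data to be documented before any fields flow to it.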

Beyond meeting regulatory requirements, data minimisation protects user privacy, limits exposure if a breach occurs and helps build customer trust.

Privacy-enhancing technologies for AI

Several privacy-enhancing technologies (PETs) play a vital role in protecting data while it is in use by AI systems.

These technologies give options to:

• Collaborate securely on data while it serves its intended purpose

• Process private data without exposing sensitive contents

• Run trusted computation in untrusted environments

Federated learning has emerged as a promising approach that trains AI models without centralising user data. One reported evaluation found that it significantly improves privacy at a cost of up to 15% extra CPU computation time and 18% extra memory usage.
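The core idea of federated learning is that clients train locally and share only model updates, which a server then combines. The minimal sketch below shows the federated averaging (FedAvg) aggregation step, using plain Python lists as stand-ins for model weights; the client updates are hypothetical. Shared updates can still leak information about training data, so secure aggregation or differential privacy is often layered on top.

```python
from typing import List, Tuple

Weights = List[float]


def federated_average(client_updates: List[Tuple[Weights, int]]) -> Weights:
    """FedAvg aggregation: average client weights, weighting each client
    by the number of local training examples it used."""
    total_examples = sum(n for _, n in client_updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n_examples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n_examples / total_examples
    return global_weights


# Each client sends (locally trained weights, number of local examples);
# the raw personal data used for training never leaves the client.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```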

Documentation requirements and compliance records

Documentation of an AI system’s privacy measures should cover:

• Specific reasons for data processing

• Legal grounds for processing operations

• Risk management practices and reduction techniques

We conduct Data Protection Impact Assessments (DPIAs) for processing likely to result in a high risk to individuals, as required by Article 35 of the GDPR. These assessments help us spot and reduce risks early in development.

When built in from the start, Privacy by Design measures fit into an AI system’s architecture without sacrificing functionality. This approach makes privacy protection about more than following rules: it builds trust and produces secure AI systems that protect user privacy by default.

Conducting AI-Specific Data Protection Impact Assessments

Data protection plays a critical role in AI systems, and we follow a structured approach to Data Protection Impact Assessments (DPIAs). Because AI often involves new technologies, large-scale processing or automated decision-making, most AI implementations will meet the legal threshold for a mandatory DPIA.
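The Article 29 Working Party’s DPIA guidelines (WP248, endorsed by the EDPB) list nine criteria for high-risk processing and suggest that processing meeting two or more of them will in most cases need a DPIA. A screening helper along those lines might look like the sketch below. The criterion labels are our own shorthand, and a single criterion can still justify a DPIA, so treat the threshold as a prompt for review rather than a legal test.

```python
from typing import Set

# Shorthand labels for the nine WP248 criteria
WP248_CRITERIA: Set[str] = {
    "evaluation_or_scoring",
    "automated_decision_with_legal_or_similar_effect",
    "systematic_monitoring",
    "sensitive_or_highly_personal_data",
    "large_scale_processing",
    "matching_or_combining_datasets",
    "vulnerable_data_subjects",
    "innovative_technology",
    "prevents_exercising_rights_or_using_service",
}


def dpia_likely_required(criteria_met: Set[str], threshold: int = 2) -> bool:
    """Screening heuristic: flag a DPIA when at least `threshold` criteria apply."""
    unknown = criteria_met - WP248_CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return len(criteria_met) >= threshold


# A credit-scoring model built with a new ML technique meets two criteria
print(dpia_likely_required({"evaluation_or_scoring", "innovative_technology"}))  # True
```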

Risk assessment methodology

The assessment begins with a systematic review of the AI system’s design, functionality and effects. One analysis found that ML development frameworks can contain up to 887,000 lines of code and rely on 137 external dependencies, which shows how broad the assessment scope can become.

A risk assessment should follow these steps:

1. Identify AI systems and use cases within the organisation

2. Document data flows and processing stages

3. Assess effects on individual rights

4. Look for security vulnerabilities and threats

5. Review compliance requirements
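Findings from these steps are often captured in a risk register that scores each risk by likelihood and severity. The three-point scales and the high/medium/low cut-offs in this sketch are hypothetical, loosely modelled on the likelihood-and-severity matrices common in DPIA templates; adapt them to your own methodology.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical three-point scales
LIKELIHOOD = {"remote": 1, "possible": 2, "probable": 3}
SEVERITY = {"minimal": 1, "significant": 2, "severe": 3}


@dataclass
class Risk:
    system: str       # step 1: which AI system or use case
    stage: str        # step 2: where in the data flow the risk arises
    description: str  # steps 3-5: effect on rights, security threat or compliance gap
    likelihood: str
    severity: str

    @property
    def score(self) -> int:
        return LIKELIHOOD[self.likelihood] * SEVERITY[self.severity]

    @property
    def level(self) -> str:
        if self.score >= 6:
            return "high"
        if self.score >= 3:
            return "medium"
        return "low"


def prioritise(risks: List[Risk]) -> List[Risk]:
    """Highest-scoring risks first, so mitigation effort goes where it matters most."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    Risk("CV screening", "training", "historic data encodes hiring bias", "probable", "severe"),
    Risk("CV screening", "inference", "model endpoint exposed without auth", "possible", "significant"),
    Risk("chat assistant", "logging", "prompts retained longer than needed", "remote", "significant"),
]
for risk in prioritise(register):
    print(risk.level, risk.score, risk.description)
```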

Mitigation strategies

Mitigation strategies should address every identified risk. Companies should focus on both technical and organisational measures:

• Security Controls: Use secure pipelines from development to deployment, and keep ML development environments separate from core IT infrastructure.

• Data Protection: De-identify training data before sharing it internally or externally.

• Documentation: Clear audit trails help track control effectiveness and meet accountability needs.
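De-identification before sharing usually combines removing direct identifiers with generalising quasi-identifiers, and a k-anonymity check shows whether any combination of quasi-identifiers still singles someone out. The sketch below illustrates that technique. The field names, 10-year age bands and postcode truncation are assumptions, and k-anonymity alone does not guarantee the data is anonymous in the GDPR sense.

```python
from collections import Counter
from typing import Dict, List, Sequence

DIRECT_IDENTIFIERS = {"name", "email", "phone"}  # hypothetical field names


def generalise(record: Dict) -> Dict:
    """Drop direct identifiers and coarsen quasi-identifiers."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    lower = (out.pop("age") // 10) * 10
    out["age_band"] = f"{lower}-{lower + 9}"
    # Keep only the outward part of a UK-style postcode, e.g. "SW1A 1AA" -> "SW1A"
    out["postcode_area"] = out.pop("postcode").split()[0]
    return out


def k_anonymity(records: List[Dict], quasi_identifiers: Sequence[str]) -> int:
    """Size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


patients = [
    {"name": "A", "email": "a@example.com", "age": 34, "postcode": "SW1A 1AA", "label": 1},
    {"name": "B", "email": "b@example.com", "age": 37, "postcode": "SW1A 2BB", "label": 0},
    {"name": "C", "email": "c@example.com", "age": 52, "postcode": "M1 1AE", "label": 1},
]
shared = [generalise(r) for r in patients]
print(k_anonymity(shared, ["age_band", "postcode_area"]))  # 1
```

A result of k = 1 means the third person is still unique on age band and postcode area, so that record would need further generalisation or suppression before the dataset is shared.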

Ongoing monitoring and review processes

Real-time monitoring tools support continuous compliance oversight alongside regular audits of AI systems.

These tools detect:

• Performance degradation

• Unexpected behaviours

• Security anomalies

• Data drift patterns

The DPIA should be treated as a ‘living’ document and updated regularly. This is crucial when ‘concept drift’ occurs, that is, when changes in demographics or behaviour patterns alter the relationship between a model’s inputs and the outcomes it predicts. Regular updates help fix problems quickly as they appear.
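A common way to detect data drift is the Population Stability Index (PSI), which compares how a feature is distributed at training time with its distribution in live traffic. The sketch below is a minimal implementation; the bin edges and age data are hypothetical, and the usual rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) is an industry convention, not a regulatory threshold. PSI only tracks shifts in inputs: concept drift also needs outcome monitoring, such as accuracy measured against fresh labels.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: Sequence[float],
                eps: float = 1e-6) -> List[float]:
    """Share of values falling in each bin; edges must be sorted ascending."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # eps keeps empty bins from causing log(0) or division by zero
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    expected = _bin_shares(baseline, edges)
    actual = _bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical applicant ages at training time vs. in live traffic
train_ages = [25] * 50 + [45] * 50
live_ages = [25] * 10 + [45] * 90
psi = population_stability_index(train_ages, live_ages, edges=[35])
print(round(psi, 3))  # 0.879
```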

Responsibilities should be clearly assigned throughout the organisation. Regular performance audits and impact assessments create feedback loops that help us learn from new insights and tackle emerging problems.

Managing Individual Rights in AI Systems

AI systems face unique challenges in managing individual rights. This requires a balanced approach to technical implementation and regulatory compliance. Building trust and transparency into AI systems matters more than just following the rules.

Right to explanation for automated decisions

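Article 22 of the GDPR gives people the right not to be subject to decisions based solely on automated processing, including profiling, that produce legal effects concerning them or similarly significantly affect them. Where such decisions are permitted, individuals must be able to obtain human intervention, express their point of view and contest the decision, and they are entitled to meaningful information about the logic involved. The strategy should focus on human oversight that aligns with the EU AI Act, which requires high-risk AI systems to include appropriate human-machine interface tools.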
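These safeguards can be reflected in how a decision pipeline routes model outputs. In the hypothetical sketch below, decisions flagged as having legal or similarly significant effects are held for a human reviewer instead of being applied automatically, and a contested decision is queued for review. Whether a decision meets that threshold is a legal assessment that the flag merely records, and the human review must be meaningful rather than a rubber stamp.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Decision:  # hypothetical model output
    subject_id: str
    proposed_outcome: str
    significant_effect: bool  # legal or similarly significant effect on the person
    key_factors: List[str] = field(default_factory=list)  # basis for explaining the logic


@dataclass
class OversightRouter:
    review_queue: List[Decision] = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        """Apply low-impact outcomes automatically; hold significant ones for a human."""
        if decision.significant_effect:
            self.review_queue.append(decision)
            return "pending_human_review"
        return decision.proposed_outcome

    def contest(self, decision: Decision) -> None:
        """A data subject contesting a decision sends it back for human review."""
        if decision not in self.review_queue:
            self.review_queue.append(decision)


router = OversightRouter()
loan = Decision("u-17", "reject", significant_effect=True,
                key_factors=["debt_to_income", "missed_payments"])
print(router.route(loan))  # pending_human_review
print(router.route(Decision("u-18", "show_offer_b", significant_effect=False)))  # show_offer_b
```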

Data access and portability requirements

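Access management should cover personal data in training datasets as well as data processed during deployment. Under Article 20, the right to data portability covers data the individual has ‘provided’, which includes both direct inputs and observed behavioural data, where processing is based on consent or a contract and carried out by automated means. Inferred or derived data generally falls outside portability, but it remains subject to the right of access.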
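Article 20 requires portable data in a structured, commonly used and machine-readable format. The sketch below assumes a hypothetical user profile already split into provided, observed and inferred sections, and exports only the first two as JSON.

```python
import json
from typing import Any, Dict


def portability_export(profile: Dict[str, Dict[str, Any]]) -> str:
    """Export the data within Article 20's scope as machine-readable JSON.
    Inferred data (e.g. model scores) is left out here, although it must
    still be disclosed in response to an access request under Article 15."""
    in_scope = {
        "provided": profile.get("provided", {}),  # e.g. form inputs, uploads
        "observed": profile.get("observed", {}),  # e.g. activity logs
    }
    return json.dumps(in_scope, indent=2, sort_keys=True)


profile = {
    "provided": {"name": "Alice", "email": "alice@example.com"},
    "observed": {"logins_last_30_days": 14},
    "inferred": {"churn_risk": 0.82},
}
print(portability_export(profile))
```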

Handling consent and withdrawal mechanisms

Our research shows that ethical AI development needs transparent communication and respect for user preferences.

Valid consent must be:

• Freely given

• Specific to defined purposes

• Informed, based on clear and detailed information

• Unambiguous, given through a clear affirmative action

• As easy to withdraw as to give

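The biggest challenge with consent is that it can be withdrawn at any time. Once consent is withdrawn, processing based on it must stop, and the data must be erased if no other lawful basis applies, although processing carried out before the withdrawal remains lawful.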

Organisations can address this by:

• Recording consent decisions precisely

• Adjusting data processing based on user preferences

• Keeping consent records current

• Offering granular consent options for different data uses
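Recording consent precisely and per purpose can be as simple as an append-only log in which the latest event for each purpose wins. The sketch below is a hypothetical design, not a specific product: withdrawal is recorded as a new event rather than by deleting history, which keeps an audit trail of what the person agreed to and when.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class ConsentEvent:
    subject_id: str
    purpose: str          # one record per purpose keeps consent granular
    granted: bool         # False records a withdrawal
    notice_version: str   # which privacy notice the person saw
    timestamp: datetime


class ConsentLedger:
    """Append-only log: history is kept, and the latest event per purpose wins."""

    def __init__(self) -> None:
        self._events: List[ConsentEvent] = []

    def record(self, subject_id: str, purpose: str, granted: bool,
               notice_version: str, when: Optional[datetime] = None) -> ConsentEvent:
        event = ConsentEvent(subject_id, purpose, granted, notice_version,
                             when or datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def has_consent(self, subject_id: str, purpose: str) -> bool:
        """True only if the most recent event for this purpose is a grant."""
        latest = None
        for event in self._events:  # assumes events are appended in time order
            if event.subject_id == subject_id and event.purpose == purpose:
                latest = event
        return latest is not None and latest.granted


ledger = ConsentLedger()
ledger.record("u-17", "model_training", True, "notice-v3")
ledger.record("u-17", "personalisation", True, "notice-v3")
ledger.record("u-17", "model_training", False, "notice-v3")  # withdrawal
print(ledger.has_consent("u-17", "model_training"))   # False
print(ledger.has_consent("u-17", "personalisation"))  # True
```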

Trust between users and technology providers grows with good consent management. This also ensures compliance with data protection regulations.

Ensuring Cross-Border Data Compliance

Cross-border data flows play a vital role in AI development and deployment. Because AI systems need massive amounts of training data, restrictions on cross-border transfers can significantly affect AI development by limiting access to training data and essential commercial services.

International data transfer requirements

The digital economy is growing rapidly, and cross-border compliance needs close attention.

Organisations transferring data across borders may encounter several key types of requirement:

• Data mirroring rules requiring local data copies

• Local storage requirements

• Processing location restrictions

• Country-specific transfer conditions

• Regulatory certification requirements

Governments often justify these digital trade barriers on grounds such as privacy protection, intellectual property rights, regulatory control and national security. When implementing compliant transfers, organisations should address risks according to the sensitivity of the data and the processing context.

Model contractual clauses for AI

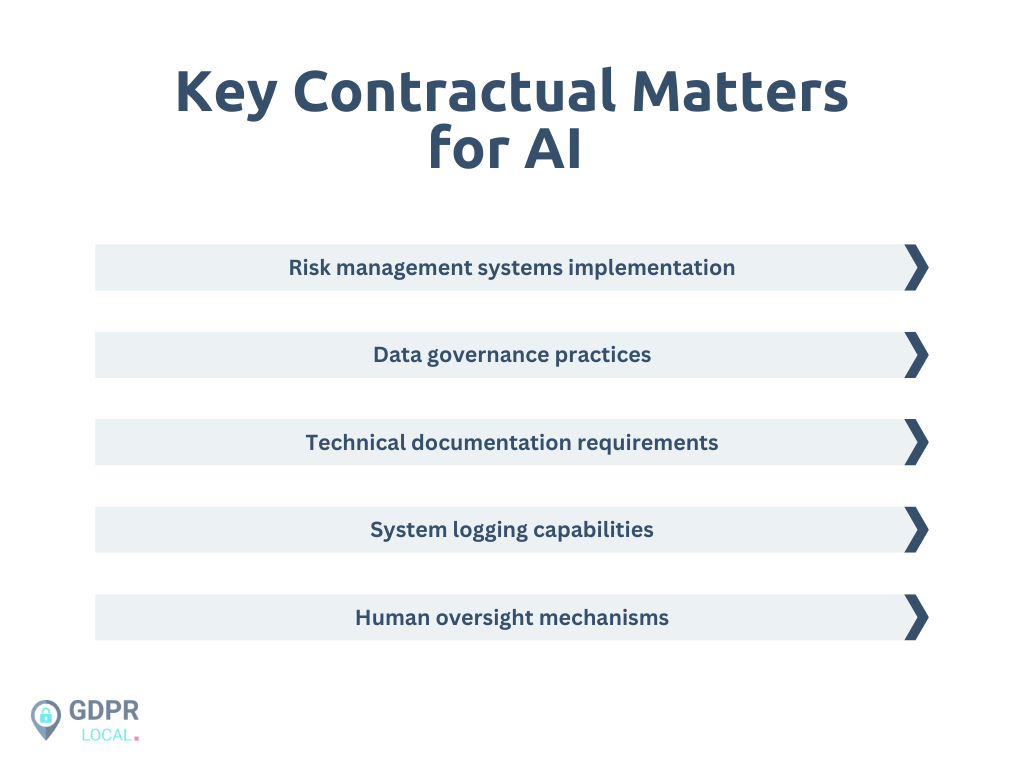

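The EU has published model contractual clauses for the procurement of AI (MCC-AI), with one version for high-risk systems and a lighter version for non-high-risk systems. These are distinct from the GDPR standard contractual clauses used for international data transfers. Our implementation strategy emphasises the key contractual considerations that ensure compliance and effectiveness.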

These AI clauses cover critical areas such as intellectual property rights, quality management systems and cybersecurity requirements. They are designed to be attached as a schedule to the main agreement and can be adapted to an organisation’s specific needs.

Third-country adequacy considerations

The European Commission decides through adequacy decisions which third countries offer an adequate level of data protection, and transfers to those countries need no further authorisation. Countries covered by adequacy decisions include:

• Switzerland

• New Zealand

• Israel

• Canada

• Argentina

Adequacy decisions are reviewed periodically, and countries with adequacy status often update their data protection laws to keep it. Some have strengthened their data protection frameworks, while others have issued new regulations or guidance clarifying existing rules.

Transfers to countries without adequacy decisions need appropriate safeguards under Article 46, such as:

• Standard contractual clauses

• Binding corporate rules

• Codes of conduct

• Certification mechanisms
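The decision logic in this section can be sketched as a simple pre-transfer check. The country set below is deliberately limited to the five examples of adequacy countries given in this section and is not the full adequacy list; the safeguard labels are our own shorthand, and Article 49 derogations are left out.

```python
from typing import List, Optional, Tuple

# Illustrative subset only (ISO country codes). Always check the European
# Commission's current adequacy list; some decisions are partial, e.g.
# Canada's covers commercial organisations subject to PIPEDA.
ADEQUACY_SUBSET = {"CH", "NZ", "IL", "CA", "AR"}

ARTICLE_46_SAFEGUARDS = {
    "standard_contractual_clauses",
    "binding_corporate_rules",
    "code_of_conduct",
    "certification",
}


def assess_transfer(destination: str,
                    safeguard: Optional[str] = None) -> Tuple[bool, List[str]]:
    """Return (transfer can proceed, follow-up notes) for a transfer outside the EEA."""
    if destination in ADEQUACY_SUBSET:
        return True, ["covered by an adequacy decision (Article 45)"]
    if safeguard in ARTICLE_46_SAFEGUARDS:
        return True, [
            f"relies on {safeguard} (Article 46)",
            "document a transfer impact assessment and any supplementary measures",
        ]
    return False, ["no adequacy decision or Article 46 safeguard in place"]


print(assess_transfer("CH"))
print(assess_transfer("IN", "standard_contractual_clauses")[0])  # True
print(assess_transfer("IN")[0])  # False
```

The transfer impact assessment note reflects the position since the Schrems II judgment: relying on Article 46 safeguards still requires checking whether the destination country’s laws undermine them.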

AI needs to process huge volumes of data for training and insights. Success in cross-border compliance comes from balancing openness with risk management in a global industry, using proper safeguards to keep data flows compliant while still enabling AI innovation.

Conclusion

AI systems need careful attention to meet GDPR requirements. Our detailed exploration shows how organisations can build compliant AI systems that perform well and take advantage of state-of-the-art solutions.

Organisations that follow these guidelines can meet regulatory requirements and build trust with users. Our experience shows that good GDPR compliance makes AI systems stronger instead of limiting their capabilities.

Both AI technology and regulatory frameworks continue to evolve, so compliance measures need regular reassessment and updating. We suggest keeping detailed records of compliance work and creating clear communication channels between technical teams and data protection officers.

For assistance with aligning GDPR with AI regulations, feel free to reach out to us at [email protected].