Conducting a DPIA: Best Practices for AI Systems

Updated: July 2025

As AI systems become more complex and pervasive, the need to assess their impact on data protection has grown accordingly. This is where a Data Protection Impact Assessment (DPIA) comes into play.

What is a DPIA? It’s a process that helps organisations identify and minimise the data protection risks of a project or plan. For AI systems, a DPIA is often a legal requirement, but it is also a vital tool for ensuring responsible and ethical use of data.

We recognise that conducting a DPIA for AI systems can be a complex task. That’s why we’re here to guide you through the process. In this article, we’ll explore when a DPIA is necessary for AI systems, the steps involved in carrying out an effective assessment, and best practices to follow.

What is a Data Protection Impact Assessment (DPIA)?

A Data Protection Impact Assessment (DPIA) is a vital process that helps us systematically analyse, identify, and minimise the data protection risks of a project or plan. It’s a key part of our accountability obligations under the UK GDPR, and when done properly, it helps us assess and demonstrate how we comply with all our data protection obligations. The DPIA is designed to be a flexible and scalable tool that we can apply to a wide range of sectors and projects.

Purpose of conducting a DPIA

The main purpose of conducting a DPIA is to ensure that we’re protecting individuals’ privacy and data rights. It’s not just a compliance exercise; it’s an effective way to identify and fix problems at an early stage, bringing broader benefits for both individuals and our organisation. By carrying out a DPIA, we can:

• Increase awareness of privacy and data protection issues within our organisation

• Ensure that all relevant staff involved in designing projects think about privacy at the early stages

• Adopt a ‘data protection by design’ approach

• Improve transparency and make it easier for individuals to understand how and why we’re using their information

• Build trust and engagement with the people using our services

• Reduce ongoing costs of a project by minimising the amount of information we collect, where possible

Key components of a DPIA

A comprehensive DPIA typically includes the following key components:

1. Description of the processing: We need to outline how and why we plan to use personal data. This includes the nature, scope, context, and purposes of the processing.

2. Assessment of necessity and proportionality: We must consider whether our plans help to achieve our purpose and if there’s any other reasonable way to achieve the same result.

3. Identification and assessment of risks: We need to consider the potential impact on individuals and any harm or damage our processing may cause, whether physical, emotional, or material.

4. Identification of mitigating measures: For each risk identified, we should record its source and consider options for reducing that risk.

5. Consultation: We should seek and document the views of individuals (or their representatives) unless there’s a good reason not to.

It’s important to note that a DPIA is not a one-off exercise. We need to keep it under review and reassess if anything changes. If we make any significant changes to how or why we process personal data, or to the amount of data we collect, we need to show that our DPIA assesses any new risks.
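Part of keeping a DPIA under review can be supported by a simple check. The sketch below assumes we keep a snapshot of each processing operation (its purposes, methods and approximate record count) from the time of the last assessment. The `ProcessingSnapshot` type, the `dpia_review_reasons` function and the 20% volume-growth threshold are hypothetical choices for illustration, not a standard; the function only lists reasons why the DPIA may need revisiting.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingSnapshot:
    """Hypothetical summary of one processing operation at a point in time."""
    purposes: frozenset[str]  # why we process the data
    methods: frozenset[str]   # how we process it, e.g. "model training"
    record_count: int         # approximate volume of personal data


def dpia_review_reasons(
    assessed: ProcessingSnapshot,
    current: ProcessingSnapshot,
    volume_growth_threshold: float = 0.2,  # assumption: >20% growth counts as "significant"
) -> list[str]:
    """List the reasons, if any, why the existing DPIA should be revisited."""
    reasons: list[str] = []
    if current.purposes != assessed.purposes:
        reasons.append("purposes changed")
    if current.methods != assessed.methods:
        reasons.append("processing methods changed")
    if assessed.record_count == 0:
        grew = current.record_count > 0
    else:
        growth = (current.record_count - assessed.record_count) / assessed.record_count
        grew = growth > volume_growth_threshold
    if grew:
        reasons.append("data volume grew significantly")
    return reasons


# Example: a new purpose has been added and volume is up by 30%.
before = ProcessingSnapshot(frozenset({"fraud detection"}), frozenset({"scoring"}), 10_000)
after = ProcessingSnapshot(frozenset({"fraud detection", "marketing"}), frozenset({"scoring"}), 13_000)
print(dpia_review_reasons(before, after))
# → ['purposes changed', 'data volume grew significantly']
```

A check like this only prompts a review; deciding whether a change is significant, and what new risks it introduces, remains a human judgement.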

When is a DPIA Required for AI Systems?

As AI systems become more prevalent, it’s crucial to understand when a Data Protection Impact Assessment (DPIA) is necessary. In most cases, the use of AI involves processing that’s likely to result in a high risk to individuals’ rights and freedoms, triggering the legal requirement for a DPIA. However, we need to assess this on a case-by-case basis.

High-risk AI applications

Article 35(3) of the UK GDPR sets out specific scenarios where a DPIA is mandatory. These are:

1. Systematic and extensive profiling with significant effects

2. Large-scale use of sensitive data

3. Public monitoring on a large scale

For AI systems, these scenarios often apply. For instance, if we’re using AI for automated decision-making that produces legal effects or similarly significant impacts on individuals, we must conduct a DPIA. This could include AI-powered recruitment systems or credit scoring algorithms.

Large-scale data processing

AI systems often rely on processing vast amounts of data, which can trigger the need for a DPIA. The DPIA guidelines of the Article 29 Working Party (WP248), later endorsed by the European Data Protection Board (EDPB), set out nine criteria that indicate high-risk processing. If our AI system meets at least two of these criteria, we should presume a DPIA is necessary. Relevant criteria include:

• Processing sensitive or highly personal data

• Large-scale data collection

• Combining or matching datasets

For example, if we’re developing a healthcare AI that processes medical records on a large scale, we’d need to conduct a DPIA due to the sensitive nature and volume of the data involved.
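The two-criteria rule of thumb is simple enough to encode as a screening aid. In this minimal sketch, the `Criterion` enum paraphrases the nine criteria from the Article 29 Working Party DPIA guidelines (WP248) endorsed by the EDPB, and `presume_dpia_needed` is a hypothetical helper. It captures only the presumption: a single criterion, or any mandatory Article 35(3) scenario, can still require a DPIA.

```python
from __future__ import annotations

from enum import Enum, auto


class Criterion(Enum):
    """High-risk indicators, paraphrased from the WP248 DPIA guidelines."""
    EVALUATION_OR_SCORING = auto()
    AUTOMATED_DECISION_WITH_SIGNIFICANT_EFFECT = auto()
    SYSTEMATIC_MONITORING = auto()
    SENSITIVE_OR_HIGHLY_PERSONAL_DATA = auto()
    LARGE_SCALE_PROCESSING = auto()
    MATCHING_OR_COMBINING_DATASETS = auto()
    VULNERABLE_DATA_SUBJECTS = auto()
    INNOVATIVE_TECHNOLOGY = auto()
    PREVENTS_EXERCISE_OF_RIGHTS = auto()


def presume_dpia_needed(criteria: set[Criterion]) -> bool:
    """Hypothetical screening helper: two or more criteria => presume a DPIA is needed.

    This encodes only the rule of thumb; a single criterion can still justify a DPIA.
    """
    return len(criteria) >= 2


# The healthcare example: sensitive medical records processed at scale.
healthcare_ai = {
    Criterion.SENSITIVE_OR_HIGHLY_PERSONAL_DATA,
    Criterion.LARGE_SCALE_PROCESSING,
}
print(presume_dpia_needed(healthcare_ai))  # → True
```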

Innovative technologies

The use of innovative technologies, including AI, is another factor that can necessitate a DPIA. This is particularly true when combined with other high-risk factors. The ICO considers AI an innovative technology that requires a DPIA when used in conjunction with other criteria from the European guidelines.

Examples of innovative AI applications that might require a DPIA include:

• Machine learning and deep learning systems

• AI-powered autonomous vehicles

• Intelligent transport systems

• Smart technologies, including wearables

• Some Internet of Things (IoT) applications

It’s important to note that “innovative” doesn’t just mean cutting-edge. If we’re implementing existing technology in a new way, this could also result in high risks that require assessment through a DPIA.

When considering whether our AI system requires a DPIA, we should also think about potential harms beyond just data protection. This includes both allocative harms (e.g., loss of opportunities or resources) and representational harms (e.g., stereotyping or denigration of certain groups).

If, after conducting a DPIA, we find that there are residual high risks to individuals that we can’t sufficiently reduce, we must consult with the ICO before starting the processing.

Given the complex nature of AI systems and their potential impact on individuals’ rights and freedoms, it’s often safer to err on the side of caution and conduct a DPIA. This process not only helps us comply with legal requirements but also ensures we’re using AI responsibly and ethically.

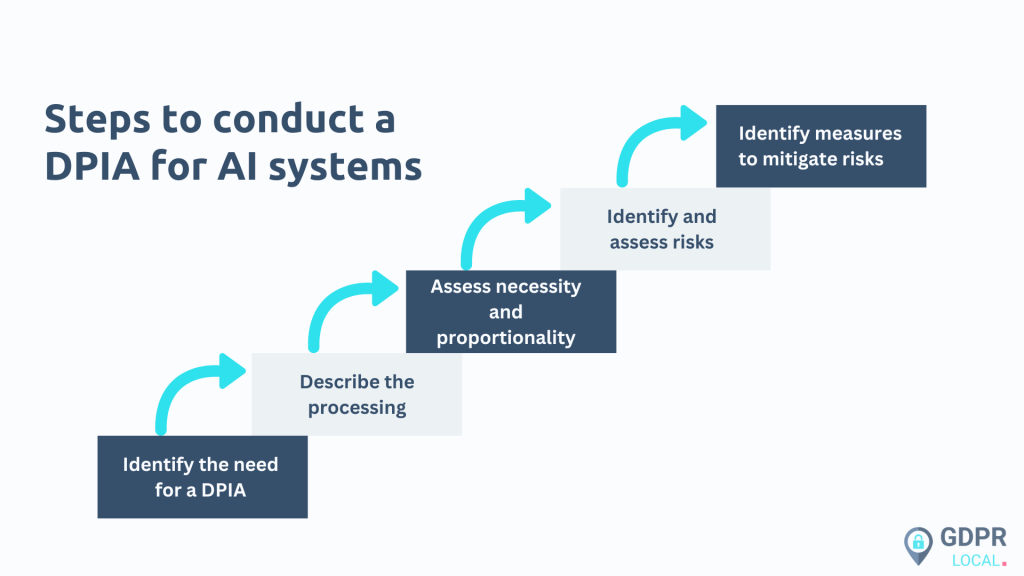

Steps to Conduct a DPIA for AI Systems

Conducting a Data Protection Impact Assessment (DPIA) for AI systems is a crucial process that helps us identify and minimise data protection risks. Let’s walk through the key steps to carry out an effective DPIA for our AI projects.

Step one – Identify the need for a DPIA

The first step is to determine if a DPIA is necessary. For AI systems, it’s often required due to the high-risk nature of the processing. We need to consider factors such as:

• The scale and scope of data processing

• The use of new or innovative technologies

• The potential impact on individuals’ rights and freedoms

If our AI system involves systematic and extensive profiling with significant effects, large-scale processing of sensitive data, or large-scale monitoring of public areas, a DPIA is mandatory.

Step two – Describe the processing

Once we’ve established the need for a DPIA, we need to provide a detailed description of how we plan to use personal data in our AI system. This includes:

• What data we’ll process and its source

• How we’ll collect, store, and use the data

• The volume, variety, and sensitivity of the data

• Who the individuals are and our relationship with them

• The intended outcomes for our organisation, individuals, or wider society

We should also outline the data flows and indicate the stages at which our AI processing might affect individuals.
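Capturing the description in a structured form makes gaps easy to spot. The sketch below is one possible layout, assuming the fields map onto the points listed above; `ProcessingDescription`, `DataFlowStage` and their field names are hypothetical and are not taken from any official template. The CV-screening example is likewise invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field, fields


@dataclass
class DataFlowStage:
    name: str                  # e.g. "training", "inference"
    affects_individuals: bool  # does processing at this stage affect people directly?


@dataclass
class ProcessingDescription:
    """Hypothetical record of the Step two description; field names are illustrative."""
    data_categories: list[str] = field(default_factory=list)
    data_sources: list[str] = field(default_factory=list)
    collection_storage_use: str = ""
    approximate_volume: str = ""
    includes_special_category_data: bool = False
    data_subjects: str = ""
    relationship_with_subjects: str = ""
    intended_outcomes: list[str] = field(default_factory=list)
    data_flows: list[DataFlowStage] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Names of text or list fields still left empty (yes/no flags are skipped)."""
        return [
            f.name
            for f in fields(self)
            if not isinstance(getattr(self, f.name), bool) and not getattr(self, f.name)
        ]

    def stages_affecting_individuals(self) -> list[str]:
        """Data-flow stages where the AI processing may affect individuals."""
        return [stage.name for stage in self.data_flows if stage.affects_individuals]


# A partly completed description for a hypothetical CV-screening tool.
draft = ProcessingDescription(
    data_categories=["CV text", "assessment scores"],
    data_sources=["job applicants"],
    data_flows=[DataFlowStage("training", False), DataFlowStage("shortlisting", True)],
)
print(draft.missing_sections())
# → ['collection_storage_use', 'approximate_volume', 'data_subjects',
#    'relationship_with_subjects', 'intended_outcomes']
print(draft.stages_affecting_individuals())  # → ['shortlisting']
```

Boolean flags are skipped when checking for gaps because `False` is a legitimate answer rather than a missing one.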

Step three – Assess necessity and proportionality

In this step, we need to evaluate whether our plans are necessary and proportionate to achieve our purpose. We should consider:

• Is AI necessary to achieve our objective?

• Are there less intrusive ways to achieve the same goal?

• Is our interest in using AI balanced against the risks to individuals’ rights and freedoms?

We need to justify any trade-offs we make, for example choosing not to reduce the amount of data we process because doing so would lower the statistical accuracy of our model.

Step four – Identify and assess risks

This is a critical component of the DPIA process. We need to analyse potential risks to individuals’ rights and freedoms that may arise from our AI system. We should consider both allocative harms (e.g., loss of opportunities or resources) and representational harms (e.g., stereotyping or denigration of certain groups).

Some risks to assess include:

• Inability to exercise rights

• Financial loss

• Loss of control over personal data

• Reputational damage

• Discrimination

• Physical harm

• Identity theft or fraud

• Loss of confidentiality

It’s important to document the likelihood and severity of these risks, assigning a score to each.
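One common way to combine likelihood and severity is a simple matrix. In this sketch the labels (remote, possible or probable likelihood; minimal, significant or severe harm; low, medium or high overall risk) follow the ICO’s sample DPIA template, while the 1 to 3 scores, the multiplication and the thresholds are illustrative assumptions that we would need to calibrate for our own organisation. The ratings in the example are made up.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    REMOTE = 1
    POSSIBLE = 2
    PROBABLE = 3


class Severity(IntEnum):
    MINIMAL = 1
    SIGNIFICANT = 2
    SEVERE = 3


def risk_score(likelihood: Likelihood, severity: Severity) -> int:
    """Score on a 1-9 scale; only 1, 2, 3, 4, 6 and 9 are possible."""
    return int(likelihood) * int(severity)


def overall_risk(score: int) -> str:
    """Map a score to low/medium/high using assumed thresholds."""
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


# Illustrative (invented) ratings for some of the risks listed above.
assessments = {
    "Discrimination": (Likelihood.POSSIBLE, Severity.SEVERE),
    "Inability to exercise rights": (Likelihood.PROBABLE, Severity.MINIMAL),
    "Loss of confidentiality": (Likelihood.REMOTE, Severity.SIGNIFICANT),
}
for risk, (likelihood, severity) in assessments.items():
    score = risk_score(likelihood, severity)
    print(f"{risk}: score {score}, {overall_risk(score)}")
# → Discrimination: score 6, high
# → Inability to exercise rights: score 3, medium
# → Loss of confidentiality: score 2, low
```

Multiplying the two scores is a design choice: it treats a remote but severe harm the same as a probable but minimal one, which some organisations may prefer to weight differently.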

Step five – Identify measures to mitigate risks

Finally, we need to identify measures to mitigate the risks we’ve identified. For each risk, we should:

1. Record its source

2. Consider options for reducing the risk

Some examples of safeguards we can implement include:

• Training staff to anticipate and manage risks

• Anonymising or pseudonymising data where possible

• Implementing clear data-sharing agreements

• Making changes to privacy notices

• Offering individuals the chance to opt out where appropriate

It’s crucial to note that we don’t always have to eliminate every risk. However, if there’s still a high risk after taking additional measures, we need to consult with the ICO before proceeding with the processing.
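A simple risk register can tie these steps together: each entry records the risk, its source, the measures chosen and the residual risk, and the register can then tell us whether prior consultation with the ICO is needed. `RiskEntry`, its fields and both helper functions are hypothetical; the default residual rating of "high" is a deliberately cautious assumption so that a risk nobody has reassessed is not silently treated as acceptable. The example entries are invented.

```python
from __future__ import annotations

from dataclasses import dataclass, field

RISK_LEVELS = ("low", "medium", "high")


@dataclass
class RiskEntry:
    """Hypothetical risk-register entry for Step five."""
    risk: str
    source: str                                           # where the risk comes from
    mitigations: list[str] = field(default_factory=list)  # measures we have chosen
    residual_risk: str = "high"  # cautious default until the risk is reassessed

    def __post_init__(self) -> None:
        if self.residual_risk not in RISK_LEVELS:
            raise ValueError(f"residual_risk must be one of {RISK_LEVELS}")


def incomplete_entries(register: list[RiskEntry]) -> list[str]:
    """Risks that still lack a recorded source or any mitigating measure."""
    return [e.risk for e in register if not e.source or not e.mitigations]


def requires_prior_consultation(register: list[RiskEntry]) -> bool:
    """True if any risk remains high after mitigation, so we must consult the ICO first."""
    return any(e.residual_risk == "high" for e in register)


register = [
    RiskEntry("Discrimination", "training data under-represents some groups",
              ["rebalance training data", "bias testing before release"], "medium"),
    RiskEntry("Loss of confidentiality", "training data contains direct identifiers",
              ["pseudonymise training data"], "low"),
    RiskEntry("Inability to exercise rights", "no route to contest automated decisions"),
]
print(incomplete_entries(register))           # → ['Inability to exercise rights']
print(requires_prior_consultation(register))  # → True
```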

By following these steps, we can conduct a thorough DPIA that helps us identify and address data protection risks effectively. Remember, a DPIA is not just a compliance exercise; it’s a valuable tool that supports the principle of data protection by design and default, as required by Article 25 of the UK GDPR.

Our goal is to create a process that works for us while ensuring we’re thoroughly assessing and addressing data protection risks in our AI systems. By doing so, we’re not just complying with legal requirements but also building trust with the individuals whose data we process.

Conclusion

Conducting a DPIA for AI systems is a crucial step to ensure responsible and ethical use of data.

The DPIA process for AI is not a one-time task but an ongoing effort that requires regular review and updates. As AI technologies continue to evolve, so too must our approaches to data protection and risk assessment. By making DPIAs a fundamental part of AI development, we can create a future where innovation and privacy go hand in hand, fostering a more responsible and trustworthy AI landscape for everyone.

For assistance with conducting a DPIA for your AI systems, please contact us.

FAQs

What exactly is a DPIA?

A Data Protection Impact Assessment (DPIA) is a process for identifying and minimising the risks that arise from processing personal data. It’s a key tool for mitigating those risks and demonstrating compliance with the General Data Protection Regulation (GDPR).

Can you provide an example of a completed DPIA?

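A DPIA is legally required only where processing is likely to result in a high risk to individuals, although it is good practice for any major project that processes personal data or could affect individuals’ privacy. For instance, a completed DPIA might cover the development of a new IT system that handles or accesses employees’ personal data.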

How does a DPIA differ from GDPR?

The GDPR is a broad regulation governing data protection across the EU (and, as the UK GDPR, in the UK). A DPIA is a specific requirement under Article 35 of the GDPR that applies when processing, particularly processing that uses new technologies, is likely to result in a high risk to individuals’ rights and freedoms. DPIAs focus on analysing how personal data will be processed and used, and they must be carried out before that processing begins.

What is a privacy impact assessment in the context of AI?

A privacy impact assessment (PIA) for AI systems involves identifying and managing the privacy risks associated with AI technologies, particularly those that handle personal and health information. In New South Wales, Australia, for example, such assessments are guided by the Privacy and Personal Information Protection Act 1998 (PPIP Act) and the Health Records and Information Privacy Act 2002 (HRIP Act), which govern how that information must be managed.