Automated Decision-Making and Regulatory Legislation in AI Systems

Update: September 2025

The Dilemma of Automated Decision-Making

At the heart of AI systems lies the promise of automated decision-making, driven by sophisticated algorithms that can process large amounts of data and make predictions with unprecedented accuracy. From healthcare diagnostics to financial risk assessment, automated decision-making has the potential to revolutionise numerous domains, enhancing efficiency and driving innovation.

Key Takeaways

- Regulatory compliance requires proactive risk management through regular audits, transparent documentation, and clear accountability frameworks for AI decisions.

- Data quality and bias mitigation are central to meeting both the EU AI Act and GDPR requirements for fair and accurate automated processing.

- Human oversight mechanisms must be implemented for high-risk AI systems to protect individual rights and meet legal obligations.

The autonomy inherent in automated decision-making raises critical concerns regarding accountability, transparency, and ethical implications. Without proper oversight, AI algorithms may perpetuate biases, discriminate against certain groups, or make decisions that contravene legal and ethical standards. This dilemma underscores the pressing need for regulatory frameworks to govern the development, deployment, and use of AI systems.

The Role of Legislation in Regulating AI Systems

Recognising the potential risks and challenges posed by AI systems, governments and regulatory bodies around the world have sought to establish legislation to govern their use. These regulatory frameworks aim to ensure transparency, accountability, fairness, and respect for human rights in the development and deployment of AI technologies.

Legislation such as the European Union’s AI Act and the General Data Protection Regulation (GDPR), along with proposals such as the United States’ Algorithmic Accountability Act (a bill that has been introduced in Congress but not enacted), sets out guidelines and requirements for AI developers and users. These instruments address various aspects of AI governance, including data privacy, algorithmic transparency, bias mitigation, and accountability for automated decisions.

Understanding Current Regulatory Requirements

GDPR and Automated Decision-Making

The General Data Protection Regulation establishes strict requirements for automated decision-making involving personal data, particularly through Article 22, which gives individuals the right not to be subject to decisions based solely on automated processing that produce legal effects or have a similarly significant impact. Organisations can only implement such systems when they are necessary for contract performance, authorised by law, or based on explicit consent.
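
The Article 22 conditions described above can be expressed as a simple screening check. The following is a minimal sketch, not a legal test: the names `LawfulBasis`, `Decision`, and `needs_human_review` are hypothetical, and whether a real decision is "solely automated" or has a "significant effect" is a legal judgement that the two boolean flags only record.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class LawfulBasis(Enum):
    """The three exceptions named in the paragraph above (labels are illustrative)."""
    CONTRACT_NECESSITY = "contract_necessity"
    AUTHORISED_BY_LAW = "authorised_by_law"
    EXPLICIT_CONSENT = "explicit_consent"


@dataclass
class Decision:
    solely_automated: bool      # no meaningful human involvement in the outcome
    significant_effect: bool    # legal or similarly significant effect on the person
    basis: Optional[LawfulBasis] = None  # recorded exception, if any


def needs_human_review(decision: Decision) -> bool:
    """Flag decisions that fall in scope of Article 22 with no recorded exception."""
    in_scope = decision.solely_automated and decision.significant_effect
    return in_scope and decision.basis is None


# A solely automated, significant decision with no recorded basis is flagged.
print(needs_human_review(Decision(True, True)))
# The same decision with explicit consent recorded is not flagged by this check.
print(needs_human_review(Decision(True, True, LawfulBasis.EXPLICIT_CONSENT)))
```

A check like this only catches decisions with no recorded basis. Where an exception does apply, individuals still need a way to request human review and contest the outcome, so the flag is a starting point rather than a full assessment.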

EU AI Act Implementation

The EU AI Act, which entered into force on 1 August 2024 with obligations phasing in through 2027, creates a comprehensive risk-based framework for AI systems. High-risk AI applications in sectors like healthcare, law enforcement, and human resources face the strictest requirements, including rigorous risk assessments, bias detection protocols, and mandatory human oversight. General-Purpose AI (GPAI) models became subject to transparency and documentation requirements in August 2025.

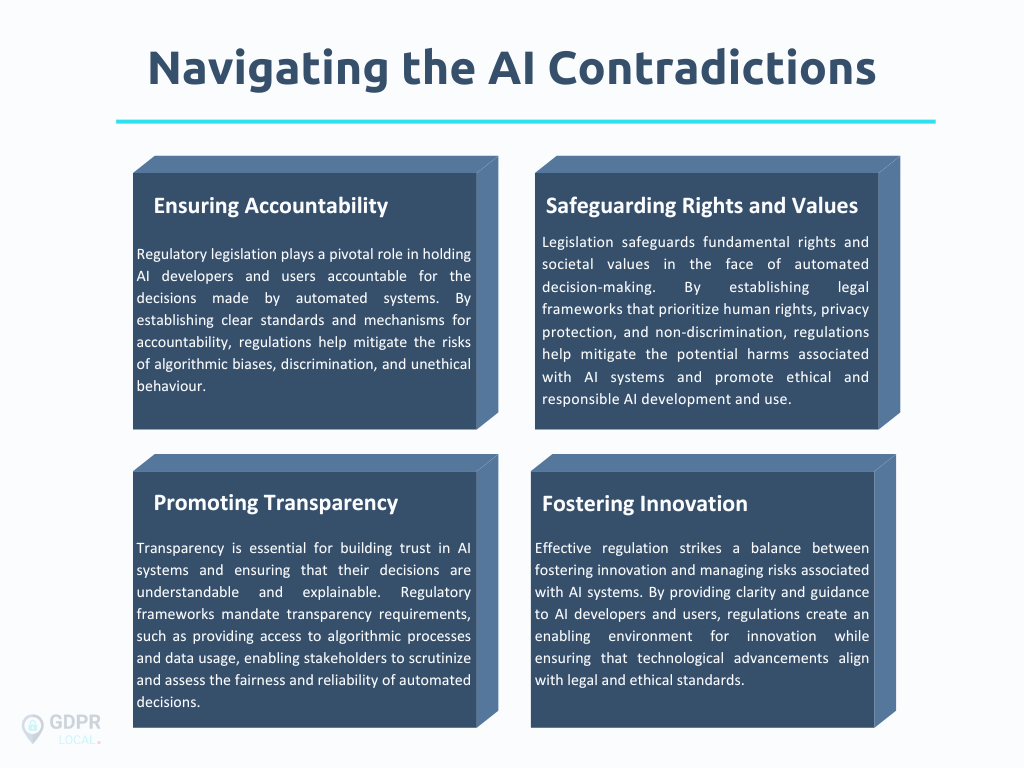

Navigating the AI Contradictions

The contradictions between automated decision-making and regulatory legislation in AI systems pose significant challenges that require careful navigation. Organisations must strike a balance between innovation and compliance requirements across multiple regulatory frameworks.

Practical Compliance Strategies

Risk Assessment and Classification

Organisations must conduct comprehensive audits to identify and classify all AI systems within their operations. This inventory should categorise systems based on risk levels, use cases, and regulatory scope. High-risk AI systems require conformity assessments, technical documentation, and ongoing monitoring protocols.
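
An inventory of this kind can start as a small data structure. The sketch below is illustrative only: the three tiers, the `AISystem` fields, and the `OBLIGATIONS` mapping are assumptions chosen for the example (only the high-risk entries mirror the obligations named above), and real classification depends on legal analysis of each use case.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    # Hypothetical tiers for illustration; higher value means higher risk.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


# Hypothetical obligations per tier. The HIGH entries follow the text above.
OBLIGATIONS = {
    RiskLevel.HIGH: ["conformity assessment", "technical documentation", "ongoing monitoring"],
    RiskLevel.LIMITED: ["transparency notice"],
    RiskLevel.MINIMAL: [],
}


@dataclass(frozen=True)
class AISystem:
    name: str
    use_case: str
    jurisdictions: tuple
    risk: RiskLevel


def compliance_backlog(inventory):
    """Map each system name to its obligations, highest-risk systems first."""
    ordered = sorted(inventory, key=lambda s: s.risk.value, reverse=True)
    return {s.name: OBLIGATIONS[s.risk] for s in ordered}


inventory = [
    AISystem("support-chatbot", "customer service", ("EU",), RiskLevel.LIMITED),
    AISystem("cv-screener", "recruitment", ("EU", "UK"), RiskLevel.HIGH),
]
for name, duties in compliance_backlog(inventory).items():
    print(name, duties)
```

Keeping the tier-to-obligation mapping in one place makes it easier to update when guidance changes, without touching the inventory records themselves.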

Data Quality and Bias Mitigation

Bias detection and mitigation represent core compliance requirements across multiple jurisdictions. Organisations should implement:

- Data preprocessing techniques to identify and correct biased datasets before training models

- Algorithmic fairness constraints that explicitly prevent discriminatory outcomes during model development

- Post-processing adjustments to calibrate AI outputs for equitable treatment across demographic groups

- Regular auditing procedures to monitor AI performance and detect emerging bias patterns over time
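
As a concrete starting point for the auditing step, two common group-fairness measures can be computed from decision logs. This is a minimal sketch using only the standard library; the function names are hypothetical, outcomes are assumed to be coded as 1 (favourable) or 0 (unfavourable), and the choice of metric and any review threshold remain policy decisions that the code does not settle.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Share of favourable outcomes (coded 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data: group "a" is selected 3 times out of 4, group "b" once out of 4.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(outcomes, groups))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(outcomes, groups))  # 0.5
```

Running these measures on a schedule, and storing the results alongside the model version, gives the regular audit a trend to watch rather than a single snapshot.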

Transparency and Explainability

Regulatory frameworks increasingly demand that AI systems provide understandable explanations for their decisions. Organisations must document model training methodologies, data sources, and decision-making processes. Under GDPR, individuals are entitled to meaningful information about the logic involved in automated decisions affecting them, along with the significance and likely consequences of that processing.

Human Oversight Implementation

Both GDPR and the EU AI Act require meaningful human intervention capabilities for high-risk automated decisions. This includes establishing clear escalation procedures, training staff on the limitations of AI, and providing accessible mechanisms for individuals to challenge automated decisions.
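
One way to make an escalation procedure concrete is a routing rule that decides which outputs a person must review. The sketch below is an assumption-laden illustration: `ModelOutput`, `route`, the 0.5 cut-off, and the `UNCERTAINTY_BAND` value are all hypothetical, and a real policy would be set by the organisation's governance team.

```python
from dataclasses import dataclass


@dataclass
class ModelOutput:
    subject_id: str
    score: float      # model's estimated probability of a favourable outcome
    high_risk: bool   # set by the organisation's own risk classification


# Hypothetical band: scores this close to the 0.5 cut-off count as uncertain.
UNCERTAINTY_BAND = 0.15


def route(output: ModelOutput, contested: bool = False) -> str:
    """Return 'human_review' or 'automated' for a single model output."""
    if contested:
        # The individual has challenged the decision.
        return "human_review"
    if output.high_risk:
        # High-risk use cases always get a human decision-maker in this sketch.
        return "human_review"
    if abs(output.score - 0.5) < UNCERTAINTY_BAND:
        # The model is not confident either way.
        return "human_review"
    return "automated"


print(route(ModelOutput("applicant-1", 0.95, high_risk=False)))  # automated
print(route(ModelOutput("applicant-2", 0.55, high_risk=False)))  # human_review
print(route(ModelOutput("applicant-3", 0.95, high_risk=True)))   # human_review
```

Routing alone does not make oversight meaningful. Reviewers also need the training on AI limitations mentioned above and the authority to overturn the model's output.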

Organisational Preparedness

AI Literacy Requirements

The EU AI Act requires organisations to ensure adequate AI literacy among employees involved in the operation and deployment of AI systems. This requirement, which has applied since February 2025, covers both providers and deployers of AI systems across all sectors. Training programmes should cover AI risk management, ethical considerations, and regulatory compliance requirements.

Governance Structures

Effective AI governance requires multidisciplinary teams that include legal, compliance, technical, and business stakeholders. Organisations should establish clear roles and responsibilities for AI oversight, implement regular review processes, and create accountability frameworks for AI-related decisions.

Documentation and Record-Keeping

Comprehensive documentation supports both compliance efforts and operational improvements. Organisations should maintain records of AI system performance, bias assessments, risk evaluations, and remediation actions taken to address identified issues.
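
Records of this kind are more useful in an audit if later edits can be detected. The sketch below shows one possible approach, a hash-chained log built with the standard library; it is an illustrative technique rather than anything the regulations prescribe, and the function names and record fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_record(system, kind, details, prev_hash=""):
    """Build one audit entry whose hash covers its content and the previous hash."""
    body = {
        "system": system,
        "kind": kind,  # e.g. "bias_assessment", "risk_evaluation", "remediation"
        "details": details,  # must be JSON-serialisable
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "prev_hash": prev_hash,
    }
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return {**body, "hash": hashlib.sha256(payload).hexdigest()}


def verify_chain(records):
    """Return True if every record is unmodified and links to its predecessor."""
    prev = ""
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(payload).hexdigest()
        if rec["prev_hash"] != prev or digest != rec["hash"]:
            return False
        prev = rec["hash"]
    return True


first = make_record("cv-screener", "bias_assessment", {"parity_gap": 0.12})
second = make_record("cv-screener", "remediation", {"action": "reweighted data"}, first["hash"])
print(verify_chain([first, second]))  # True
```

Because each entry's hash includes the previous entry's hash, changing or removing an earlier record causes verification to fail for the chain as a whole.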

Managing Implementation Challenges

The intersection of innovation and regulation creates practical challenges for organisations seeking to leverage AI capabilities responsibly. Companies must balance speed-to-market pressures with thorough compliance processes, often requiring significant investments in governance infrastructure and technical capabilities.

Cross-border operations face added complexity as different jurisdictions implement varying requirements and timelines. Organisations operating globally must develop flexible compliance frameworks that can adapt to multiple regulatory environments.

The contradictory nature of automated decision-making and regulatory legislation in AI systems highlights the complex interplay between technological advancement and societal values. Effective regulation is crucial in navigating these contradictions, encouraging the development of ethical and responsible AI, and protecting the rights and interests of individuals and society as a whole. As we advance AI, we must strike a balance between innovation and regulation to guarantee fairness, accountability, and transparency for the common good.

FAQs

What specific documentation must organisations maintain to ensure compliance with the EU AI Act?

Organisations must maintain complete technical documentation, including model architecture details, training methodologies, risk assessments, and results of bias testing. For GPAI models, providers must publish a summary of the content used for training and document model capabilities and known limitations for regulators and downstream providers. High-risk systems require conformity assessments and continuous monitoring records.

How does GDPR Article 22 apply to AI systems used in hiring and employment decisions?

GDPR Article 22 restricts solely automated hiring decisions that significantly affect individuals, requiring explicit consent, legal authorisation, or contractual necessity. Organisations must provide human review opportunities, clearly explain the decision criteria, and allow individuals to contest the outcomes. Special protections apply when processing sensitive personal data categories.

What are the most effective strategies for detecting and mitigating AI bias in operational systems?

Organisations should implement diverse data collection practices, conduct regular algorithmic audits using fairness metrics, and establish procedures for adversarial testing. Effective approaches include preprocessing data for representativeness, incorporating fairness constraints during model training, and implementing post-processing adjustments to correct biased outputs. Cross-functional teams with diverse perspectives improve bias identification capabilities.
